"Markov" (Machine Learning Method)

- Method for Classify.

- Model class probabilities using the n-gram frequencies of the given sequence.
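To illustrate what "n-gram frequencies" means, here is a minimal Python sketch. It is not how `Classify` computes them internally. The helper `ngram_counts` is hypothetical, and it counts contiguous n-grams as tuples. With `n = 2` (bigrams), this corresponds to the n-grams used when "Order"→1.

```python
from collections import Counter

def ngram_counts(tokens, n):
    """Hypothetical helper: count contiguous n-grams (as tuples) in a token sequence."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

# Bigrams of "abab" are ab, ba, ab.
counts = ngram_counts(list("abab"), 2)
print(counts[("a", "b")], counts[("b", "a")])  # 2 1
```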

Details & Suboptions

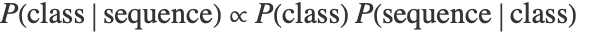

- In a Markov model, an n-gram language model is computed for each class at training time. At test time, the probability of each class is computed according to Bayes's theorem, $P(\text{class} \mid x) \propto P(x \mid \text{class})\, P(\text{class})$, where $P(x \mid \text{class})$ is given by the language model of the given class and $P(\text{class})$ is the class prior.

- The following options can be given:

| Option | Default | Description |
|---|---|---|
| "AdditiveSmoothing" | 0.1 | the smoothing parameter to use |
| "MinimumTokenCount" | Automatic | minimum count for an n-gram to be considered |
| "Order" | Automatic | n-gram length |

- When "Order"→n, the method partitions sequences into (n+1)-grams.

- When "Order"→0, the method uses unigrams (single tokens). In this case, the model is also called a unigram model or a naive Bayes model.

- The value of "AdditiveSmoothing" is added to all n-gram counts. This regularizes the language model; for example, it keeps n-grams that never occurred in training from receiving zero probability.
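The following minimal Python sketch shows the method described above. It is a sketch under stated assumptions, not Wolfram's implementation. It trains one n-gram language model per class and combines each class's likelihood with its prior using Bayes's theorem.

All names here (`MarkovClassifier`, `ngrams`, `BOS`) are hypothetical. The sketch also makes the following assumptions:

- Each sequence is padded with a start symbol, so that every token has a full context of `order` preceding tokens. Each (context, token) pair is therefore an (order+1)-gram.
- Additive smoothing is normalized as $(c + \alpha)/(c_\text{context} + \alpha |V|)$ (Lidstone smoothing).
- Class priors are the empirical class frequencies.
- "MinimumTokenCount" is omitted.

```python
import math
from collections import Counter, defaultdict

BOS = "<s>"  # hypothetical padding symbol marking the start of a sequence

def ngrams(tokens, order):
    """Yield (context, token) pairs; context holds the `order` preceding tokens.
    With order=0 the context is always empty, giving a unigram (naive Bayes) model."""
    padded = [BOS] * order + list(tokens)
    for i in range(order, len(padded)):
        yield tuple(padded[i - order:i]), padded[i]

class MarkovClassifier:
    """Hypothetical sketch: one smoothed n-gram language model per class."""

    def __init__(self, order=1, smoothing=0.1):
        self.order = order          # number of preceding tokens used as context
        self.smoothing = smoothing  # added to every n-gram count; must be > 0 here

    def fit(self, sequences, labels):
        self.vocab = {tok for seq in sequences for tok in seq}
        self.class_counts = Counter(labels)         # used for empirical priors
        self.ngram_counts = defaultdict(Counter)    # class -> (context, token) counts
        self.context_counts = defaultdict(Counter)  # class -> context counts
        for seq, label in zip(sequences, labels):
            for context, token in ngrams(seq, self.order):
                self.ngram_counts[label][(context, token)] += 1
                self.context_counts[label][context] += 1
        return self

    def log_likelihood(self, seq, label):
        """log P(seq | class) under the class's additively smoothed n-gram model."""
        a, v = self.smoothing, len(self.vocab)
        total = 0.0
        for context, token in ngrams(seq, self.order):
            num = self.ngram_counts[label][(context, token)] + a
            den = self.context_counts[label][context] + a * v
            total += math.log(num / den)
        return total

    def predict_proba(self, seq):
        """Posterior P(class | seq) via Bayes's theorem, normalized over classes."""
        n = sum(self.class_counts.values())
        log_post = {c: self.log_likelihood(seq, c) + math.log(k / n)
                    for c, k in self.class_counts.items()}
        m = max(log_post.values())  # subtract the max before exponentiating
        weights = {c: math.exp(lp - m) for c, lp in log_post.items()}
        z = sum(weights.values())
        return {c: w / z for c, w in weights.items()}

# Usage: class "A" sequences favor "a" after "a", class "B" favors "b" after "b".
train = ["aaab", "aaba", "abaa", "bbba", "bbab", "babb"]
labels = ["A", "A", "A", "B", "B", "B"]
clf = MarkovClassifier(order=1, smoothing=0.1).fit(train, labels)
probs = clf.predict_proba("aaaa")
print(max(probs, key=probs.get))  # A
```

The sketch works in log space and subtracts the largest log-posterior before exponentiating. This is one way to avoid numeric underflow when long sequences multiply many small probabilities. With `smoothing=0`, an unseen n-gram or context would produce `log(0)` or a division by zero. This shows why a positive smoothing value matters.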