SoftmaxLayer[]

represents a softmax net layer.

SoftmaxLayer[n]

represents a softmax net layer that uses level n as the normalization dimension.

Details and Options

- SoftmaxLayer[…][input] explicitly computes the output for input.

- SoftmaxLayer[…][{input1,input2,…}] explicitly computes outputs for each of input1, input2, ….

- When given a NumericArray as input, the output will be a NumericArray.

- SoftmaxLayer is typically used inside NetChain, NetGraph, etc. to normalize the outputs of other layers so that they can be used as class probabilities for classification tasks.

- SoftmaxLayer can operate on arrays that contain "Varying" dimensions.

- SoftmaxLayer exposes the following ports for use in NetGraph etc.:

| Port | Description |
| --- | --- |
| "Input" | a numerical array of dimensions d1×d2×…×dn |
| "Output" | a numerical array of dimensions d1×d2×…×dn |

- When it cannot be inferred from other layers in a larger net, the option "Input"->n can be used to fix the input dimensions of SoftmaxLayer.

- SoftmaxLayer[] is equivalent to SoftmaxLayer[-1].

- SoftmaxLayer effectively normalizes the exponential of the input array, producing vectors that sum to 1. For the default level of -1, the innermost dimension is used as the normalization dimension.

- When SoftmaxLayer[-1] is applied to a vector v, it produces the vector Normalize[Exp[v],Total]. When applied to an array of higher dimension, it is mapped onto level -1.

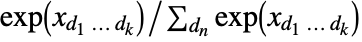

- When SoftmaxLayer[n] is applied to a k-dimensional input array $x_{i_1 \dots i_k}$, it produces the array $y_{i_1 \dots i_n \dots i_k} = \exp(x_{i_1 \dots i_n \dots i_k}) / \sum_{j} \exp(x_{i_1 \dots j \dots i_k})$, where n is the summed-over index of x, so that j runs over the n-th index.

- SoftmaxLayer[…,"Input"->shape] allows the shape of the input to be specified. Possible forms for shape are:

| Form | Meaning |
| --- | --- |
| n | a vector of size n |
| {d1,d2,…} | an array of dimensions d1×d2×… |
| {"Varying",d2,d3,…} | an array whose first dimension is variable and remaining dimensions are d2×d3×… |

- Options[SoftmaxLayer] gives the list of default options to construct the layer. Options[SoftmaxLayer[…]] gives the list of default options to evaluate the layer on some data.

- Information[SoftmaxLayer[…]] gives a report about the layer.

- Information[SoftmaxLayer[…],prop] gives the value of the property prop of SoftmaxLayer[…]. Possible properties are the same as for NetGraph.

Examples

Basic Examples (2)

Create a SoftmaxLayer:
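A minimal sketch of this step, relying on the default normalization level of -1 stated in the details above:

```wolfram
(* Default form; per the details above, equivalent to SoftmaxLayer[-1] *)
SoftmaxLayer[]
```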

Create a SoftmaxLayer that takes a vector of length 5 as input:
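A sketch of the construction, using the "Input"->n option described in the details. The variable names and the sample vector {1, 2, 3, 4, 5} are illustrative assumptions, not values taken from the original page:

```wolfram
(* Fix the input to a vector of length 5 *)
softmax = SoftmaxLayer["Input" -> 5]

(* Apply the layer to a sample vector; the result is a list of 5 positive values *)
out = softmax[{1, 2, 3, 4, 5}]

(* The entries sum to 1, up to numerical precision *)
Total[out]
```

Larger input entries receive larger shares of the total, so the last element of the result is the largest.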

Scope (5)

Create a SoftmaxLayer that takes a matrix of dimensions 3×2 as input:

Each row of the matrix is normalized:
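Both steps can be sketched together as follows. The sample matrix and variable names are illustrative assumptions:

```wolfram
(* Fix the input to a 3×2 matrix; the default level -1 normalizes the innermost dimension *)
softmax = SoftmaxLayer["Input" -> {3, 2}]

(* Apply the layer to a sample matrix *)
result = softmax[{{1, 2}, {3, 4}, {5, 6}}]

(* Row sums: each of the three rows sums to approximately 1 *)
Total[result, {2}]
```

Because the two entries in every row of this sample differ by the same amount, all three output rows should coincide up to rounding.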

Create a SoftmaxLayer that uses the first dimension as the normalization dimension:
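A sketch under the same assumptions as above (an illustrative 3×2 input), passing 1 as the level so that normalization runs over the first dimension:

```wolfram
(* Level 1 selects the first (outermost) dimension for normalization *)
softmax = SoftmaxLayer[1, "Input" -> {3, 2}]

(* Apply the layer to a sample matrix *)
result = softmax[{{1, 2}, {3, 4}, {5, 6}}]

(* Column sums: each of the two columns now sums to approximately 1 *)
Total[result]
```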

Create a SoftmaxLayer that normalizes over a variable-length dimension:

Apply it to sequences of different lengths:

SoftmaxLayer threads over a batch of inputs:
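A minimal sketch of batch evaluation, assuming a layer whose input is fixed to a vector of length 3. The sample batch is illustrative:

```wolfram
(* The layer expects a single vector of length 3 *)
softmax = SoftmaxLayer["Input" -> 3]

(* A list of two length-3 vectors is treated as a batch of two inputs,
   and one normalized vector is returned for each *)
softmax[{{1, 2, 3}, {4, 5, 6}}]
```

The two outputs should agree up to rounding, since softmax is unchanged when the same constant is added to every entry of its input.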

Create a SoftmaxLayer that uses a NetDecoder to interpret the output as class probabilities:

Interpret the outputs of the softmax layer as probabilities:

Properties & Relations (3)

SoftmaxLayer[-1] computes the following:
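The identity from the details, Normalize[Exp[v],Total], can be checked on a sample vector. The vector v below is an illustrative assumption:

```wolfram
v = {1., 2., 3.};

(* Evaluation through the layer *)
SoftmaxLayer["Input" -> 3][v]

(* Direct computation: exponentiate, then divide by the total *)
Normalize[Exp[v], Total]
```

The two results should agree up to numerical precision.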

The normalization dimension cannot have size 1, since normalizing over a single element always produces a constant array of ones:

SoftmaxLayer cannot accept symbolic inputs:

Neat Examples (1)

Create three instances of SoftmaxLayer that each take and return an RGB image but normalize over the color channel, height, and width dimensions, respectively:


Wolfram Research (2016), SoftmaxLayer, Wolfram Language function, https://reference.wolfram.com/language/ref/SoftmaxLayer.html (updated 2018).
