LocalResponseNormalizationLayer[]

represents a net layer that normalizes its input by averaging across neighboring input channels.


Details and Options

- The following optional parameters can be included:

  - "Alpha", default 1.0: scaling parameter
  - "Beta", default 0.5: power parameter
  - "Bias", default 1.0: bias parameter
  - "ChannelWindowSize", default 2: number of channels to average over

- LocalResponseNormalizationLayer[…][input] explicitly computes the output from applying the layer.

- LocalResponseNormalizationLayer[…][{input_1, input_2, …}] explicitly computes the output for each input_i.

- When given a NumericArray as input, the output will be a NumericArray.

- LocalResponseNormalizationLayer is typically used inside NetChain, NetGraph, etc.

- LocalResponseNormalizationLayer exposes the following ports for use in NetGraph etc.:

  - "Input": a rank-3 array
  - "Output": a rank-3 array

- When it cannot be inferred from other layers in a larger net, the option "Input"->{n1,n2,n3} can be used to fix the input dimensions of LocalResponseNormalizationLayer.

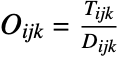

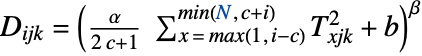

- The output array $O_{ijk}$ is obtained via

  $$O_{ijk} = \frac{T_{ijk}}{\left(b + \frac{\alpha}{n}\, S_{ijk}\right)^{\beta}}, \qquad S_{ijk} = \sum_{m \in W_i} T_{mjk}^{2},$$

  where $T_{ijk}$ is the input, $W_i \subseteq \{1, \ldots, N\}$ is the window of $n$ neighboring channels around channel $i$ (truncated at the first and last channel), $\alpha$ is the "Alpha" parameter, $\beta$ is the "Beta" parameter, $b$ is the "Bias" parameter, $n$ is the "ChannelWindowSize" and $N$ is the number of channels in the input $T_{ijk}$.

- Options[LocalResponseNormalizationLayer] gives the list of default options to construct the layer. Options[LocalResponseNormalizationLayer[…]] gives the list of default options to evaluate the layer on some data.

- Information[LocalResponseNormalizationLayer[…]] gives a report about the layer.

- Information[LocalResponseNormalizationLayer[…],prop] gives the value of the property prop of LocalResponseNormalizationLayer[…]. Possible properties are the same as for NetGraph.
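The output formula described above can be sketched in plain Python for a rank-3 input stored as nested lists indexed as T[channel][row][column]. This is an illustrative reimplementation, not the code path Wolfram Language uses, and the names `channel_window`, `lrn` and `lrn_many` are hypothetical. Two details are assumptions: the exact channel window (here it looks back floor((n-1)/2) channels and ahead by the remainder, so it spans n channels before being clipped to the valid range, which is one common convention) and the placement of "Bias" as the additive constant b in the denominator. Defaults mirror the documented ones: "Alpha" 1.0, "Beta" 0.5, "Bias" 1.0, "ChannelWindowSize" 2.

```python
from typing import List

# A rank-3 array stored as nested lists, indexed as T[i][j][k] with i = channel.
Array3 = List[List[List[float]]]


def channel_window(i: int, n: int, num_channels: int) -> range:
    """0-based channels m whose squares enter the sum for channel i.

    ASSUMPTION: look back floor((n - 1) / 2) channels and ahead by the
    remainder, so the window spans n channels before clipping to [0, N).
    """
    behind = (n - 1) // 2
    ahead = n - 1 - behind
    return range(max(0, i - behind), min(num_channels, i + ahead + 1))


def lrn(t: Array3, alpha: float = 1.0, beta: float = 0.5,
        bias: float = 1.0, channel_window_size: int = 2) -> Array3:
    """Hypothetical helper computing
    O[i][j][k] = T[i][j][k] / (bias + alpha / n * S[i][j][k]) ** beta,
    where S is the sum of T[m][j][k] ** 2 over the channel window of i.
    ASSUMPTION: "Bias" is the additive constant in the denominator.
    """
    num_channels = len(t)
    n = channel_window_size
    out: Array3 = []
    for i in range(num_channels):
        window = channel_window(i, n, num_channels)
        plane = []
        for j, row in enumerate(t[i]):
            new_row = []
            for k, value in enumerate(row):
                s = sum(t[m][j][k] ** 2 for m in window)
                new_row.append(value / (bias + alpha / n * s) ** beta)
            plane.append(new_row)
        out.append(plane)
    return out


def lrn_many(inputs: List[Array3], **params) -> List[Array3]:
    """Normalize each input separately, mirroring layer[{input_1, input_2, ...}]."""
    return [lrn(t, **params) for t in inputs]


if __name__ == "__main__":
    # Two channels, each a 1x1 spatial plane.
    x = [[[3.0]], [[4.0]]]
    print(lrn(x))
```

With the defaults, the last channel's window contains only itself, so its value becomes 4/(1 + 16/2)^0.5 = 4/3, while the first channel's window covers both channels, giving 3/(1 + 25/2)^0.5. Because each element is only rescaled, the output has the same dimensions as the input.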

Examples

Basic Examples (2)

Create a LocalResponseNormalizationLayer:

Create a LocalResponseNormalizationLayer with input dimensions specified:

Scope (1)

Ports (1)

Create a LocalResponseNormalizationLayer with input dimensions specified:
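As a rough illustration of the "Input"->{n1,n2,n3} port specification, the Python sketch below checks a nested-list array against such a specification. The helpers `array_dims` and `check_input_port` are hypothetical and are not part of any Wolfram Language API. The first dimension n1 plays the role of the channel count N in the normalization formula, since the channel sum runs over the first index; the function returns the output dimensions, which equal the input dimensions because the layer only rescales each element.

```python
from typing import Sequence, Tuple


def array_dims(t) -> Tuple[int, ...]:
    """Dimensions of a nested list, e.g. a 2x3x4 array -> (2, 3, 4).

    Assumes the list is regular: only the first element at each level is inspected.
    """
    dims = []
    while isinstance(t, list):
        dims.append(len(t))
        t = t[0] if t else None
    return tuple(dims)


def check_input_port(t, spec: Sequence[int]) -> Tuple[int, ...]:
    """Hypothetical check mirroring "Input" -> {n1, n2, n3}.

    Raises ValueError if t is not rank 3 or its dimensions differ from spec.
    Returns the output dimensions, which equal the input dimensions.
    """
    dims = array_dims(t)
    if len(dims) != 3:
        raise ValueError(f"expected a rank-3 array, got rank {len(dims)}")
    if tuple(spec) != dims:
        raise ValueError(f"input dimensions {dims} do not match {tuple(spec)}")
    return dims


if __name__ == "__main__":
    # Three channels of 4x4 zeros; n1 = 3 is the channel dimension.
    x = [[[0.0] * 4 for _ in range(4)] for _ in range(3)]
    print(check_input_port(x, [3, 4, 4]))
```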


Text

Wolfram Research (2017), LocalResponseNormalizationLayer, Wolfram Language function, https://reference.wolfram.com/language/ref/LocalResponseNormalizationLayer.html.

CMS

Wolfram Language. 2017. "LocalResponseNormalizationLayer." Wolfram Language & System Documentation Center. Wolfram Research. https://reference.wolfram.com/language/ref/LocalResponseNormalizationLayer.html.

APA

Wolfram Language. (2017). LocalResponseNormalizationLayer. Wolfram Language & System Documentation Center. Retrieved from https://reference.wolfram.com/language/ref/LocalResponseNormalizationLayer.html

BibTeX

@misc{reference.wolfram_2025_localresponsenormalizationlayer, author="Wolfram Research", title="{LocalResponseNormalizationLayer}", year="2017", howpublished="\url{https://reference.wolfram.com/language/ref/LocalResponseNormalizationLayer.html}", note={Accessed: 13-December-2025}}

BibLaTeX

@online{reference.wolfram_2025_localresponsenormalizationlayer, organization={Wolfram Research}, title={LocalResponseNormalizationLayer}, year={2017}, url={https://reference.wolfram.com/language/ref/LocalResponseNormalizationLayer.html}, note={Accessed: 13-December-2025}}