"LogisticRegression" (Machine Learning Method)

- Method for Classify.

- Models class probabilities with logistic functions of linear combinations of features.

Details & Suboptions

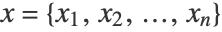

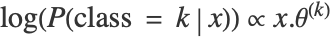

- "LogisticRegression" models the log probabilities of each class with a linear combination of numerical features

,

,  , where

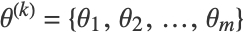

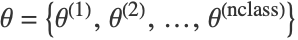

, where  corresponds to the parameters for class k. The estimation of the parameter matrix

corresponds to the parameters for class k. The estimation of the parameter matrix  is done by minimizing the loss function

is done by minimizing the loss function ![sum_(i=1)^m-log(P_(theta)(class=y_i|x_i))+lambda_1 sum_(i=1)^nTemplateBox[{{theta, _, i}}, Abs]+(lambda_2)/2 sum_(i=1)^ntheta_i^2 sum_(i=1)^m-log(P_(theta)(class=y_i|x_i))+lambda_1 sum_(i=1)^nTemplateBox[{{theta, _, i}}, Abs]+(lambda_2)/2 sum_(i=1)^ntheta_i^2](Files/LogisticRegression.en/5.png) .
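The model and loss function above can be sketched in a few lines of plain Python. This is an illustrative sketch only, not the implementation used by Classify: the function names `class_probabilities` and `loss`, the toy data, the use of 0-based class indices as labels, the absence of an intercept term, and the choice to sum the penalties over every entry of the parameter matrix are all assumptions made for the example.

```python
import math


def class_probabilities(theta, x):
    """Return P(class=k | x) for every class k as a softmax of theta[k] . x."""
    scores = [sum(t * xi for t, xi in zip(theta_k, x)) for theta_k in theta]
    top = max(scores)  # subtract the maximum score for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def loss(theta, xs, ys, lambda1=0.0, lambda2=0.0):
    """Negative log-likelihood plus L1 and L2 penalties (assumed over all parameters)."""
    nll = -sum(math.log(class_probabilities(theta, x)[y]) for x, y in zip(xs, ys))
    flat = [t for theta_k in theta for t in theta_k]
    l1_penalty = lambda1 * sum(abs(t) for t in flat)
    l2_penalty = 0.5 * lambda2 * sum(t * t for t in flat)
    return nll + l1_penalty + l2_penalty


# Hypothetical toy data: two features, two classes, labels given as class indices.
xs = [[1.0, 0.0], [0.0, 1.0]]
ys = [0, 1]
theta = [[0.0, 0.0], [0.0, 0.0]]  # one parameter vector per class

print(loss(theta, xs, ys, lambda1=0.1, lambda2=0.1))
```

With all parameters at zero every class receives the same probability, so both penalty terms vanish and only the log-likelihood term contributes to the printed value.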

- The following options can be given:

| Option | Default | Description |
| --- | --- | --- |
| "L1Regularization" | 0 | value of $\lambda_1$ in the loss function |
| "L2Regularization" | Automatic | value of $\lambda_2$ in the loss function |
| "OptimizationMethod" | Automatic | what method to use |

- Possible settings for "OptimizationMethod" include:

| Setting | Description |
| --- | --- |
| "LBFGS" | limited memory Broyden–Fletcher–Goldfarb–Shanno algorithm |
| "StochasticGradientDescent" | stochastic gradient method |
| "Newton" | Newton method |