"NaiveBayes" (Machine Learning Method)

- Method for Classify.

- Determines the class using Bayes's theorem and assuming that features are independent given the class.

Details & Suboptions

- Naive Bayes is a classification technique based on Bayes's theorem

which assumes that the features

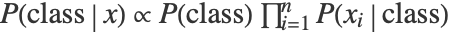

which assumes that the features  are independent given the class. The class probabilities for a given example are then:

are independent given the class. The class probabilities for a given example are then:  , where

, where  is the probability distribution of feature

is the probability distribution of feature  given the class, and

given the class, and  is the prior probability of the class. Both distributions are estimated from the training data. In the current implementation, distributions are modeled using a piecewise-constant function (i.e a variable-width histogram).
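As a worked illustration of the class-probability rule, consider a hypothetical problem with two classes, A and B, and two features. All numbers below are made up for illustration: the priors are $P(A) = P(B) = 0.5$, and the estimated per-feature densities at the example's feature values are $P(x_1 \mid A) = 0.8$, $P(x_2 \mid A) = 0.5$, $P(x_1 \mid B) = 0.2$, $P(x_2 \mid B) = 0.4$. Multiplying the prior by the per-feature terms and normalizing gives:

$$
\begin{aligned}
P(A)\,P(x_1 \mid A)\,P(x_2 \mid A) &= 0.5 \times 0.8 \times 0.5 = 0.20 \\
P(B)\,P(x_1 \mid B)\,P(x_2 \mid B) &= 0.5 \times 0.2 \times 0.4 = 0.04 \\
P(A \mid x_1, x_2) &= \frac{0.20}{0.20 + 0.04} \approx 0.833 \\
P(B \mid x_1, x_2) &= \frac{0.04}{0.20 + 0.04} \approx 0.167
\end{aligned}
$$

The example would therefore be assigned to class A.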

- The following suboption can be given:

  - "SmoothingParameter" (default: .2): regularization parameter

Examples

Basic Examples (2)

Options (2)

"SmoothingParameter" (2)

Train a classifier using the "SmoothingParameter" suboption:
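A minimal sketch of such a call, assuming a small made-up training set (the data, the variable names and the value 2 are illustrative choices, not taken from this page):

```wolfram
(* Toy training set: one numeric feature -> class label (made-up data) *)
trainingSet = {1.0 -> "A", 1.5 -> "A", 2.0 -> "A", 4.0 -> "B", 4.5 -> "B", 5.0 -> "B"};

(* The suboption is given in a list together with the method name; the value 2 is arbitrary *)
c = Classify[trainingSet, Method -> {"NaiveBayes", "SmoothingParameter" -> 2}]

(* Inspect the class probabilities for a new example *)
c[1.8, "Probabilities"]
```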

Train several classifiers on the "FisherIris" dataset by using different settings of the "SmoothingParameter" option:
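One way this could be written, assuming the dataset is loaded through `ExampleData` and using three arbitrary smoothing values (1, 20 and 100 are illustrative assumptions):

```wolfram
(* Load the Fisher iris data as a list of rules: features -> species *)
data = ExampleData[{"MachineLearning", "FisherIris"}, "Data"];

(* Train one classifier per smoothing setting; the values are arbitrary *)
c1 = Classify[data, Method -> {"NaiveBayes", "SmoothingParameter" -> 1}];
c2 = Classify[data, Method -> {"NaiveBayes", "SmoothingParameter" -> 20}];
c3 = Classify[data, Method -> {"NaiveBayes", "SmoothingParameter" -> 100}];
```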

Evaluate these classifiers on a data point that is unlike points from the training set and compare the class probability for class "setosa":
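A self-contained sketch of this comparison follows. The test point `outlier` and the smoothing values are hypothetical, chosen only so that the point lies far from typical iris measurements:

```wolfram
(* Load the Fisher iris data as a list of rules: features -> species *)
data = ExampleData[{"MachineLearning", "FisherIris"}, "Data"];

(* Hypothetical example that is unlike the training points *)
outlier = {2.5, 3.2, 8.5, 4.3};

(* For each smoothing value, train a classifier and query P("setosa") for the outlier *)
Table[
  s -> Classify[data, Method -> {"NaiveBayes", "SmoothingParameter" -> s}][
    outlier, "Probability" -> "setosa"],
  {s, {1, 20, 100}}
]
```

Comparing the resulting values side by side shows how the smoothing setting affects the classifier's confidence on an input far from the training data.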