Performance of Linear Algebra Computation

This tutorial covers issues related to performance.

Packed Arrays

One of the challenges of building Wolfram Language is to make sure that the system is general enough to support symbolic computation and fast enough so that numerical computation is efficient. These and other goals are described under "Design Principles of Wolfram Language". One technique that Wolfram Language provides is to use specialized representations for important types of Wolfram Language expression. In the case of linear algebra this involves what is known as Packed Array Technology, and it was first introduced in version 4.0.

The first vector uses less memory and supports faster computations because it is built from a packed array. Packed arrays are a representation for rectangular tensors of machine integers, machine reals, and complex numbers with real and imaginary parts that are machine reals.

Packed Array Functions

A number of functions for working with packed arrays are summarized.

| Developer`PackedArrayQ[expr] | return True if expr is a packed array |

| Developer`ToPackedArray[expr] | convert expr to a packed array if possible |

| Developer`ToPackedArray[expr,t] | convert expr to a packed array of type t if possible |

| Developer`FromPackedArray[expr] | convert expr from a packed array |

| Developer`PackedArrayForm[expr] | print packed arrays in expr in a summary form |

Packed Array Operations

Many functions can work more efficiently with packed arrays compared with unpacked lists, but the results will be the same, whether a packed or an unpacked version is used. In order to maintain consistency, the system must, on occasion, unpack a packed array. Because this is a potential source of inefficiency, a message is available to let you know that something has become unpacked. You can enable this message with a system option.

Packed Array Summary

To make use of packed arrays, it is typically hard to exploit functions such as Developer`ToPackedArray. The main issue is to make sure that you have a uniformity of elements in your matrices; for example, that you are not mixing integers and reals.

Programming Efficiency

This section discusses techniques for writing efficient Wolfram Language programs that solve linear algebra problems.

Measuring Performance

If you want to study the performance of your Wolfram Language code there are a number of timing related functions you can use.

| Timing[expr] | evaluate expr and return a list of the CPU time needed, together with the result obtained |

| AbsoluteTiming[expr] | evaluate expr giving the absolute time taken |

Timing Wolfram Language operations.

Another useful function is TimeConstrained.

| TimeConstrained[expr,t] | try to evaluate expr, aborting the calculation after t seconds |

| TimeConstrained[expr,t,failexpr] | return failexpr if the time constraint is not met |

Vectorizing Loops

A common operation involves some type of iteration over the elements of a matrix. Because the matrices may be large it is obviously important to do this efficiently.

One of the most important ways to write efficient code is to avoid explicit part references, especially in inner loops. This is a major source of inefficiency in user code and can be avoided by using functions that work on all the elements of a matrix or a vector at once. These vectorized operations allow Wolfram Language to use highly optimized code that runs faster.

List Creation

This problem involves creating a list and then initializing it with certain values. For example, computing

v〚i〛 ⟹ Sin[i]

One way to do this would be to create the list and then to use a For loop that iterates over its elements to set them to a value.

n = 10;

v = Table[0, {n}];

For[i = 1, i <= n, i++, v[[i]] = Sin[2.0 Pi i]];

Slow way to initialize a list.

It is much faster to create the list and initialize it all at once; it is also neater.

n = 10;

v = Table[ Sin[2.0 Pi i], {i, 1, n}]

Faster way to initialize a list.

An even faster way is to use the optimized function Range to create the list and then to use vectorized operations.

n = 10;

v = Sin[2.0 Pi Range[1., n]]

An even faster way to initialize a list.

Commands such as Table and Range are very optimized for building lists and matrices. They are discussed under "Building Matrices".

List Updating

This problem involves updating the elements of a list; for example, computing the Sin of every element.

v〚i〛 ⟹ Sin[v〚i〛]

One way to do this involves iterating over the elements of the list replacing each with the updated version.

Do[v[[i]] = Sin[v[[i]]], {i, n}];

It is much faster to compute the new values by using a vectorized operation. The code is also neater and tidier.

v = Sin[v]

Another form of updating requires another argument that is not constant across the list.

v〚i〛 ⟹ v〚i〛/i^2

One way to do this involves iterating over the elements of the list, dividing each by the square of the index.

n = Length[v];

Do[ v[[i]] = v[[i]]/i^2 , {i, n}];

It is faster to compute the list of updates and then carry out a vectorized division.

d=Range[n]^2;

v=v/d

The use of listable operations is discussed under "Listability".

Using Built-in Support

Wolfram Language has a very wide range of functions, including many for list processing that have important applications for writing matrix computations. Before you start to work it is worth looking to see if any of the built-in functions will do what you want. For example, if what you are doing involves a convolution or correlation then you should use the appropriate functions; they will almost certainly be faster.

Another important point to remember is that Wolfram Language can represent a wide range of mathematical objects. For example, Wolfram Language can work with the actual linear equations that matrices are used to represent. Sometimes the equational form can be more expressive and convenient and this can lead to greater efficiency. The topic is discussed under "Converting Equations to Sparse Arrays".

One area of built-in functions with many applications for linear algebra computations are those for structural manipulation of lists. Wolfram Language functions such as Part, Take, Drop, Transpose, PadLeft, PadRight, RotateLeft, and RotateRight are all heavily optimized and have many applications. These are discussed in more detail under "Structural Operations". It is usually good if you can write your computations to use these functions.

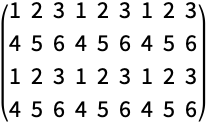

A worked example will now be given that discusses different techniques to extend a matrix by tiling copies. For example, the input matrix

should be padded out to return the following matrix.

Three different ways to solve the problem are demonstrated.

A Slow Way

This way suffers from several speed deficiencies, the most severe being the inner loops over the part indices. These would be much faster if they were replaced by vectorized code. It was explained in "Measuring Performance" that explicit part references in an inner loop can lead to inefficient code.

A Faster Way

This method is much faster; it uses the function Part, which is very flexible and heavily optimized. It is described further under "Getting Pieces of a Matrix".

Also Fast but Neater

This method is also neater and is probably the fastest for small input matrices. For large matrices, it is similar to the speed of the Part example already shown. The use of PadRight is described under "Extending Matrices".

Matrix Contents

Matrices in Wolfram Language can be constructed from all the different types of object that Wolfram Language holds. They can contain machine-precision floating-point numbers, arbitrary-precision floating-point numbers, complex floating-point numbers, integers, rational numbers, and general symbolic quantities. The different types are discussed under "Matrix Types".

That Wolfram Language can work with these different types of matrix is a great strength of the system. However, it has a number of implications for the efficiency of numerical floating-point computation: first from a mix of symbolic and numerical entries, secondly from a mix of different types of numbers, and thirdly from integer matrices.

Mixed Symbolic/Numerical Matrices

If you mix symbolic and numerical elements in a matrix, the linear algebra functions will treat the matrix as symbolic as discussed under "Mixed Mode Matrices". It should be noted that techniques for working with symbolic matrices are typically slower than the heavily optimized techniques available for numerical matrices.

The solution is to make sure that your matrices only contain numbers.

Mixed Numerical Type Matrices

If you mix different types of numbers in your matrices the linear algebra functions will treat the matrix as numerical and will coerce the matrix to the lowest precision. This is discussed under "Mixed Mode Matrices". For linear algebra functions this will not have as severe an impact on performance as when working with symbolic matrices (this was demonstrated in the previous section).

The solution to this problem is to make sure you initialize with real numbers if you are going to work with real numbers.

Integer Matrices

Of course, it is a strength of Wolfram Language that it can return the exact result for a computation involving an integer matrix. But it should be noted that many purely numerical systems would return the approximate result.

The solution is that to work with matrices of approximate real numbers, you should start with approximate real numbers.

Expression Efficiency

"Matrices as Wolfram Language Expressions" explained that Wolfram Language represents matrices as expressions, a uniform data type that is used throughout the system. It described the advantages that came from this uniformity in the system. There are a number of efficiency implications that arise from the use of Wolfram Language expressions. This section discusses these.

Updating of Matrices

If you use the vectorizing operations described previously, this is a good way to avoid the iteration over the matrix and minimize the number of copying operations. If you really cannot avoid iterating over the matrix, it is a good idea to keep the loop as simple and tidy as possible so that you can avoid extra copies of the matrix.

The time for the overall process will scale with the square of the length of the list for the technique that has to copy at every step. With no copying the time will scale linearly. The longer the input list, the greater the impact, and the performance of the code will start to degrade.

Appending to Matrices

In Wolfram Language, expressions are implemented as arrays. This allows fast access to any particular elements of an expression. Because matrices are regular Wolfram Language expressions, they are also arrays. While arrays are very fast for accessing elements, they are slower for adding elements. Typically this requires a copy, and it should be avoided. The example that follows shows the cost of adding elements.

The time for the overall process will scale with the square of the length of the list for the technique that has to copy at every step. With no copying the time will scale linearly. The longer the input list, the greater the impact, and the performance of the code will start to degrade.