Fit

Details and Options

- Fit is also known as linear regression or least squares fit. With regularization, it is also known as LASSO and ridge regression.

- Fit is typically used for fitting combinations of functions to data, including polynomials and exponentials. It provides one of the simplest ways to get a model from data.

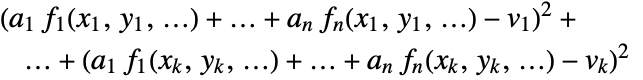

- The best fit minimizes the sum of squares

.

. - The data can have the following forms:

-

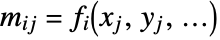

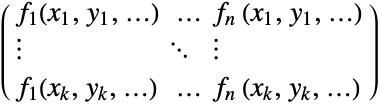

{v1,…,vn} equivalent to {{1,v1},…,{n,vn}} {{x1,v1},…,{xn,vn}} univariate data with values vi at coordinates xi {{x1,y1,v1},…} bivariate data with values vi and coordinates {xi,yi} {{x1,y1,…,v1},…} multivariate data with values vi at coordinates {xi,yi,…} - The design matrix m has elements that come from evaluating the functions at the coordinates,

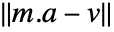

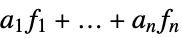

. In matrix notation, the best fit minimizes the norm

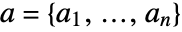

. In matrix notation, the best fit minimizes the norm  where

where  and

and  .

. - The functions fi should depend only on the variables {x,y,…}.

- The possible fit properties "prop" include:

-

"BasisFunctions" funs the basis functions "BestFit"

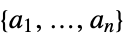

the best fit linear combination of basis functions "BestFitParameters"

the vector  that gives the best fit

that gives the best fit"Coordinates" {{x1,y1,…},…} the coordinates of vars in data "Data" data the data "DesignMatrix" m

"FitResiduals"

the differences between the model and the fit at the coordinates "Function" Function[{x,y,…},a1 f1+…+an fn] best fit pure function "PredictedResponse"

fitted values for the data coordinates "Response"

the response vector  from the input data

from the input data{"prop1","prop2",…} several fit properties - Fit takes the following options:

| Option | Default | Description |
| --- | --- | --- |
| NormFunction | Norm | measure of the deviations to minimize |
| FitRegularization | None | regularization for the fit parameters |
| WorkingPrecision | Automatic | the precision to use |

- With NormFunction->normf and FitRegularization->rfun, Fit finds the coefficient vector a that minimizes normf[{a.f(x1,y1,…)-v1,…,a.f(xk,yk,…)-vk}] + rfun[a].

- The setting for NormFunction can be given in the following forms:

| Setting | Description |
| --- | --- |
| normf | a function normf that is applied to the deviations |
| {"Penalty", pf} | sum of the penalty function pf applied to each component of the deviations |
| {"HuberPenalty",α} | sum of Huber penalty function for each component |
| {"DeadzoneLinearPenalty",α} | sum of deadzone linear penalty function for each component |

- The setting for FitRegularization may be given in the following forms:

| Setting | Description |
| --- | --- |
| None | no regularization |
| rfun | regularize with rfun[a] |
| {"Tikhonov",λ} | regularize with $\lambda\lVert a\rVert^2$ |
| {"LASSO",λ} | regularize with $\lambda\lVert a\rVert_1$ |
| {"Variation",λ} | regularize with $\lambda\lVert \mathrm{Differences}[a]\rVert^2$ |
| {"TotalVariation",λ} | regularize with $\lambda\lVert \mathrm{Differences}[a]\rVert_1$ |
| {"Curvature",λ} | regularize with $\lambda\lVert \mathrm{Differences}[a,2]\rVert^2$ |
| {r1,r2,…} | regularize with the sum of terms from r1,… |

- With WorkingPrecision->Automatic, exact numbers given as input to Fit are converted to approximate numbers with machine precision.
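The relation between Fit, the design matrix and the least-squares problem described above can be checked numerically. The following is a minimal sketch, assuming a small made-up data set; the names `data`, `funs`, `coeffs`, `m`, `v` and `coeffsLS` are illustrative and not part of the original page.

```wolfram
(* Hypothetical sample data: values v_i at coordinates x_i *)
data = {{0., 1.1}, {1., 1.9}, {2., 3.2}, {3., 3.8}, {4., 5.1}};
funs = {1, x};

(* Best-fit coefficient vector reported by Fit *)
coeffs = Fit[data, funs, x, "BestFitParameters"];

(* Build the design matrix by hand: m[[i, j]] = f_j(x_i) *)
m = Table[funs /. x -> xi, {xi, data[[All, 1]]}];
v = data[[All, 2]];

(* Solve the least-squares problem for m.a == v directly *)
coeffsLS = LeastSquares[m, v];

(* Both differences should vanish up to rounding error *)
Max[Abs[coeffs - coeffsLS]]
Max[Abs[m - Fit[data, funs, x, "DesignMatrix"]]]
```

Building `m` by substitution mirrors the definition of the design matrix given above; for larger problems the "DesignMatrix" property avoids the manual step.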

Examples

Basic Examples (2)

Scope (2)

Here is some data defined with exact values:

Fit the data to a linear combination of sine functions using machine arithmetic:

Fit the data using 24-digit precision arithmetic:
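The three steps above might look like the following sketch. The data set and the particular sine basis are assumptions made for illustration, since the original inputs are not shown here.

```wolfram
(* Hypothetical exact data; not the data from the original example *)
data = {{0, 0}, {1, 2}, {2, 1}, {3, -1}, {4, -2}, {5, 0}};

(* Default WorkingPrecision -> Automatic: exact input is converted to machine numbers *)
fitMachine = Fit[data, {Sin[x], Sin[2 x], Sin[3 x]}, x]

(* Request 24-digit arithmetic for the same fit *)
fit24 = Fit[data, {Sin[x], Sin[2 x], Sin[3 x]}, x, WorkingPrecision -> 24]

(* Compare the precision of the returned coefficients *)
Precision /@ {fitMachine, fit24}
```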

Here is some data in three dimensions:

Find the plane that best fits the data:

Show the plane with the data points:
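A sketch of this plane fit, assuming synthetic data scattered about the plane v = 1 + 2 x - y (the data, the noise level and the plot styling are illustrative choices, not taken from the original example):

```wolfram
(* Hypothetical bivariate data {x, y, v} near the plane v = 1 + 2 x - y *)
SeedRandom[1];
data = Flatten[
   Table[{x, y, 1 + 2 x - y + RandomReal[{-0.1, 0.1}]}, {x, 0, 3}, {y, 0, 3}], 1];

(* Fit a linear combination of 1, x and y in the two variables *)
plane = Fit[data, {1, x, y}, {x, y}]

(* Overlay the fitted plane on the data points *)
Show[
 Plot3D[plane, {x, 0, 3}, {y, 0, 3}, PlotStyle -> Opacity[0.5]],
 ListPointPlot3D[data, PlotStyle -> Red]]
```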

Generalizations & Extensions (1)

Options (6)

FitRegularization (2)

Tikhonov regularization controls the size of the best fit parameters:

Without the regularization, the coefficients are quite large:

This can also be referred to by "L2" or "RidgeRegression":
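As a rough illustration of the effect, the sketch below compares coefficient norms with and without Tikhonov regularization. The data, the degree-8 polynomial basis and the value λ = 0.1 are assumptions chosen for the example.

```wolfram
(* Hypothetical data: noisy samples of Sin[3 t] on [-1, 1] *)
SeedRandom[2];
data = Table[{t, Sin[3 t] + RandomReal[{-0.05, 0.05}]}, {t, -1., 1., 0.2}];
basis = x^Range[0, 8];

(* Unregularized and Tikhonov-regularized fits *)
plainFit = Fit[data, basis, x];
ridgeFit = Fit[data, basis, x, FitRegularization -> {"Tikhonov", 0.1}];

(* Read the coefficient vectors back from the fitted polynomials *)
plain = CoefficientList[plainFit, x];
ridge = CoefficientList[ridgeFit, x];

(* The penalty on the size of a should not increase the coefficient norm *)
{Norm[plain], Norm[ridge]}
```

Larger values of λ trade a worse match to the data for smaller coefficients.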

LASSO (least absolute shrinkage and selection operator) regularization selects the most significant basis functions and gives some control of the size of the best fit parameters:

Without the regularization, the coefficients are quite large:
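A comparable sketch for LASSO, again with assumed data, basis and λ. The threshold used to count "nonzero" coefficients is an arbitrary choice for illustration, and how many coefficients survive depends on λ.

```wolfram
(* Hypothetical data: noisy samples of a cubic, fitted with a degree-10 basis *)
SeedRandom[3];
data = Table[{t, 2 t - t^3 + RandomReal[{-0.02, 0.02}]}, {t, -1., 1., 0.1}];
basis = x^Range[0, 10];

plain = CoefficientList[Fit[data, basis, x], x];
lasso = CoefficientList[
   Fit[data, basis, x, FitRegularization -> {"LASSO", 0.5}], x];

(* Count coefficients that are not negligibly small (threshold is an assumption) *)
countNonzero[a_] := Count[a, c_ /; Abs[c] > 10^-6];

(* Compare the 1-norms and the number of active basis functions *)
{Total[Abs[plain]], Total[Abs[lasso]]}
{countNonzero[plain], countNonzero[lasso]}
```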

Applications (6)

Compute a high-degree polynomial fit to data using a Chebyshev basis:

Use regularization to stabilize the numerical solution ![]() to ![]() where ![]() is perturbed:

The solution found by LinearSolve has very large entries:

Regularized solutions stay much closer to the solution of the unperturbed problem:

Use variation regularization to find a smooth approximation for an input signal:

The output target is a step function:

The input is determined from the output via convolution with ![]() :

Without regularization, the predicted response matches the signal very closely, but the computed input has a lot of oscillation:

With regularization of the variation, a much smoother approximation can be found:

Regularization of the input size may also be included:

Smooth a corrupted signal using variation regularization:

Plot the tradeoff between the norm of the residuals and the norm of the variation:

Choose a value of λ near where the curve bends sharply:

Use total variation regularization to smooth a corrupted signal with jumps:

Regularize with the parameter ![]() :

A smaller value of λ gives less smoothing, but the residual norm is smaller:

Use LASSO (L1) regularization to find a sparse fit (basis pursuit):

The goal is to approximate the signal with just a few of the thousands of Gabor basis functions:

With ![]() , a fit is found using only 41 of the basis elements:

Once the important elements of the basis have been found, error can be reduced by finding the least squares fit to these elements:

With a smaller value ![]() , a fit is found using more of the basis elements:

Properties & Relations (5)

Fit gives the best fit function:

LinearModelFit allows for extraction of additional information about the fitting:

Extract additional results and diagnostics:
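A short sketch of this comparison, assuming a small made-up data set. The properties requested from the fitted model are two of those that LinearModelFit provides.

```wolfram
(* Hypothetical data for a straight-line fit *)
data = {{1, 1.2}, {2, 1.9}, {3, 3.1}, {4, 3.9}, {5, 5.2}};

(* Fit returns the best fit expression *)
Fit[data, {1, x}, x]

(* LinearModelFit adds the constant term by default and returns a fitted model object *)
lm = LinearModelFit[data, x, x];
lm["BestFit"]

(* Additional diagnostics are available as properties of the model *)
lm[{"RSquared", "FitResiduals"}]
```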

This is the sum of squares error for a line a+b x:

Find the minimum symbolically:

These are the coefficients given by Fit:

The exact coefficients may be found by using WorkingPrecision->Infinity:
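The steps for the line a+b x can be sketched as follows, assuming a small exact data set chosen for illustration. The minimum is located here by setting both partial derivatives to zero, which is one of several ways to find it symbolically.

```wolfram
(* Hypothetical exact data *)
data = {{0, 1}, {1, 3}, {2, 4}, {3, 8}};

(* Sum of squares error for the line a + b x *)
sse = Total[((a + b #1 - #2)^2 &) @@@ data];

(* The error is a convex quadratic in a and b, so the stationary point is the minimum *)
sol = Solve[{D[sse, a] == 0, D[sse, b] == 0}, {a, b}]

(* Machine-precision coefficients from Fit *)
Fit[data, {1, x}, x]

(* Exact coefficients *)
Fit[data, {1, x}, x, WorkingPrecision -> Infinity]
```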

This is the sum of squares error for a quadratic a+b x+c x^2:

Find the minimum symbolically:

These are the coefficients given by Fit:

When a polynomial fit is done to a high enough degree, Fit returns the interpolating polynomial:

The result is consistent with that given by InterpolatingPolynomial:
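A minimal sketch of this check, assuming four made-up exact data points, so that a cubic basis has as many functions as there are points:

```wolfram
(* Hypothetical exact data with four points *)
data = {{0, 1}, {1, 3}, {2, 2}, {3, 5}};

(* A cubic has four coefficients, so the fit can pass through every point *)
fit = Fit[data, {1, x, x^2, x^3}, x, WorkingPrecision -> Infinity]

(* Interpolating polynomial through the same points, expanded for comparison *)
ip = Expand[InterpolatingPolynomial[data, x]]

(* The difference should simplify to zero *)
Expand[fit - ip]
```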

Fit will use the time stamps of a TimeSeries as variables:

Rescale the time stamps and fit again:

Fit acts pathwise on a multipath TemporalData:

Possible Issues (2)

Here is some data from a random perturbation of a Gaussian:

This is a function that gives the standard basis for polynomials:

Show the fits computed for successively higher-degree polynomials:

The problem is that the coefficients get very small for higher powers:

Giving the basis in terms of scaled and shifted values helps with this problem:
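The contrast between the two bases can be sketched as below. The data, the degree and the particular shift and scale, (x - 50)/50, are assumptions chosen so that the scaled variable stays in [-1, 1]; the names `standardBasis` and `scaledBasis` are hypothetical. The fit in the standard basis is poorly conditioned at machine precision and may produce warnings.

```wolfram
(* Hypothetical data: a randomly perturbed Gaussian sampled on 0..100 *)
SeedRandom[4];
data = Table[{t, Exp[-(t - 50)^2/200.] + RandomReal[{-0.01, 0.01}]}, {t, 0, 100, 2}];

(* Standard monomial basis and a shifted, scaled alternative *)
standardBasis[n_] := x^Range[0, n];
scaledBasis[n_] := ((x - 50)/50)^Range[0, n];

stdCoeffs = Fit[data, standardBasis[10], x, "BestFitParameters"];
scaledCoeffs = Fit[data, scaledBasis[10], x, "BestFitParameters"];

(* Smallest coefficient magnitude in each basis *)
{Min[Abs[stdCoeffs]], Min[Abs[scaledCoeffs]]}

(* Residual norm for the scaled basis *)
resScaled = Norm[Fit[data, scaledBasis[10], x, "FitResiduals"]]
```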

Reconstruct a sparse signal from compressed sensing data:

Compressed sensing consists of multiplying the signal with an ![]() matrix with ![]() so only data of length ![]() has to be transmitted. If ![]() is large enough, a random matrix with independent, identically distributed normal entries will satisfy the restricted isometry property, and the original signal can be recovered with very high probability:

The samples to send are constructed by multiplication of the signal with the matrix ![]() :

Reconstruction can be done by minimizing ![]() for all possible solutions of ![]() :

Even though the minimization is solved by linear optimization, it is relatively slow because all the constraints are equality constraints. The solution can be found much faster using basis pursuit (L1 regularization):

This gives the basis elements. To find the best solution, solve the linear equations corresponding to those components:

See Also

FindFit LinearModelFit NonlinearModelFit DesignMatrix LeastSquares PseudoInverse Interpolation InterpolatingPolynomial Solve ListPlot ConvexOptimization

Function Repository: QuantileRegression


History

Introduced in 1988 (1.0) | Updated in 2019 (12.0)

Text

Wolfram Research (1988), Fit, Wolfram Language function, https://reference.wolfram.com/language/ref/Fit.html (updated 2019).
