"NeighborhoodContraction" (Machine Learning Method)

- Method for FindClusters, ClusterClassify and ClusteringComponents.

- Partitions data into clusters of similar elements using the "NeighborhoodContraction" clustering algorithm.

Details & Suboptions

- "NeighborhoodContraction" is a neighbor-based clustering method. It works for arbitrary cluster shapes and sizes; however, it can fail when clusters have different densities or are intertwined.

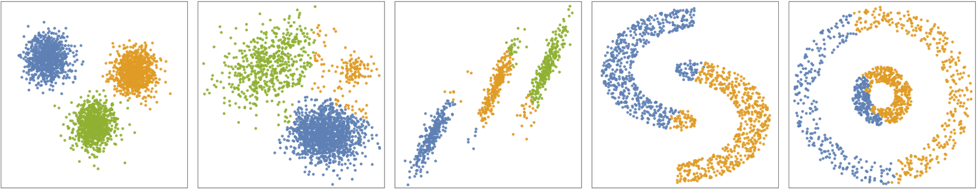

- The following plots show the results of the "NeighborhoodContraction" method applied to toy datasets:

-

- The "NeighborhoodContraction" method iteratively shifts data points toward higher density regions. During this procedure, data points tend to collapse to different fixed points, each of them representing a cluster.

- Formally, at each step, each data point $x_i$ is set to the mean of its neighboring points: $x_i \leftarrow \frac{1}{|N_\epsilon(x_i)|} \sum_{x_j \in N_\epsilon(x_i)} x_j$.

- The neighboring points $N_\epsilon(x_i)$ are defined as all the points within a ball of radius ϵ centered at $x_i$. The algorithm repeats the updates until points stop moving; all points belonging to a cluster are then collapsed (up to a tolerance). This algorithm is equivalent to the "MeanShift" method but with a different neighborhood definition.

- The following suboption can be given:

| Suboption | Default | Description |
| --- | --- | --- |
| "NeighborhoodRadius" | Automatic | radius ϵ |

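The update rule and the role of the radius ϵ can be illustrated with a short Wolfram Language sketch. This is not the built-in implementation: the helper names `contractStep` and `contract`, the use of Euclidean distance, the tolerance and the iteration cap are all assumptions made for illustration.

```wolfram
(* One contraction step: replace every point by the mean of all points
   lying within distance eps of it (the point itself always qualifies) *)
contractStep[pts_, eps_] :=
  Table[Mean[Select[pts, EuclideanDistance[#, p] <= eps &]], {p, pts}];

(* Repeat the step until no coordinate moves by more than 10^-8,
   or until 200 steps have been taken (both values are assumptions) *)
contract[pts_, eps_] :=
  FixedPoint[contractStep[#, eps] &, N[pts], 200,
    SameTest -> (Max[Abs[#1 - #2]] <= 10^-8 &)];

(* Hypothetical toy data: three points near the origin, two near {5, 5} *)
pts = {{0, 0}, {0.2, 0.1}, {0.1, 0.3}, {5, 5}, {5.2, 4.9}};
fixed = contract[pts, 1.];

(* Points that collapsed to the same location (up to rounding) share a cluster *)
clusters = GatherBy[Range[Length[pts]], Round[fixed[[#]], 10^-4] &]
```

With this toy data and ϵ = 1, the first three indices end up in one group and the last two in another. In the built-in method, the radius is set through the suboption syntax, for example `Method -> {"NeighborhoodContraction", "NeighborhoodRadius" -> 1.}`.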
Examples

Basic Examples (3)

Find clusters of nearby values using the "NeighborhoodContraction" clustering method:
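The original input for this example is not preserved here. A minimal sketch of such a call, using a hypothetical list of numbers chosen for illustration, might look like this:

```wolfram
(* Hypothetical one-dimensional data with visibly separated groups *)
data = {1, 2, 3, 10, 11, 12, 25, 26};

(* Cluster the values with the "NeighborhoodContraction" method *)
clusters = FindClusters[data, Method -> "NeighborhoodContraction"]
```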

Train the ClassifierFunction on a list of colors using the "NeighborhoodContraction" method:

Gather the elements by their class number:
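The inputs for the two steps above are not preserved here. As a sketch only, training on colors and then gathering them by class could be written as follows; the random color list, its size and the seed are assumptions:

```wolfram
(* Hypothetical data: 50 random colors, seeded for reproducibility *)
SeedRandom[1234];
colors = RandomColor[50];

(* Train a ClassifierFunction with the "NeighborhoodContraction" method *)
c = ClusterClassify[colors, Method -> "NeighborhoodContraction"];

(* c returns a class number for each color; group colors with equal class *)
groups = GatherBy[colors, c]
```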

Train a ClassifierFunction on a mixture of two-dimensional normal distributions:
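A possible version of this example is sketched below. The mixture components, their means, the sample size and the final plot are assumptions, since the original input is not preserved here:

```wolfram
(* Hypothetical mixture of two 2D normal distributions with equal weights *)
SeedRandom[1234];
mixture = MixtureDistribution[{1, 1},
   {MultinormalDistribution[{0, 0}, IdentityMatrix[2]],
    MultinormalDistribution[{6, 6}, IdentityMatrix[2]]}];
data = RandomVariate[mixture, 300];

(* Train the classifier on the sampled points *)
c = ClusterClassify[data, Method -> "NeighborhoodContraction"];

(* Plot the points, with one plot style per assigned class *)
ListPlot[GatherBy[data, c]]
```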

Options (3)

DistanceFunction (2)

Generate a list of 200 random colors:

Find clusters by specifying a DistanceFunction option:
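The two steps above could be sketched as follows. The choice of `ManhattanDistance` and the explicit conversion of each color to its RGB components are assumptions made so that the distance function operates on plain numeric vectors; the original input may have differed:

```wolfram
(* Generate 200 random colors, seeded for reproducibility *)
SeedRandom[1234];
colors = RandomColor[200];

(* Assumption: represent each RGBColor[r, g, b] as the vector {r, g, b} *)
rgb = List @@@ colors;

(* Cluster on the RGB vectors, but return the corresponding colors *)
clusters = FindClusters[rgb -> colors,
   DistanceFunction -> ManhattanDistance,
   Method -> "NeighborhoodContraction"]
```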

Generate points based on a mixture of two-dimensional normal distributions:

Find different clustering structures by specifying different DistanceFunction options:
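As an illustration of the two steps above, the following sketch samples a hypothetical mixture and clusters it under three distance functions. The mixture parameters, the sample size and the particular distance functions compared are assumptions:

```wolfram
(* Hypothetical mixture of two 2D normal distributions *)
SeedRandom[1234];
mixture = MixtureDistribution[{1, 1},
   {MultinormalDistribution[{0, 0}, IdentityMatrix[2]],
    MultinormalDistribution[{4, 4}, IdentityMatrix[2]]}];
data = RandomVariate[mixture, 300];

(* Cluster the same points under several distance functions *)
distances = {EuclideanDistance, ManhattanDistance, ChessboardDistance};
results = Table[
   FindClusters[data, DistanceFunction -> f,
     Method -> "NeighborhoodContraction"],
   {f, distances}];

(* One plot per distance function, labeled with the function used *)
Table[ListPlot[results[[i]], PlotLabel -> distances[[i]]],
  {i, Length[distances]}]
```

Because the ball of radius ϵ has a different shape under each distance function, the resulting neighborhoods, and therefore the clusters, can differ.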