
"GaussianMixture" (Machine Learning Method)

- Method for LearnDistribution, FindClusters, ClusterClassify and ClusteringComponents.

- Models probability density with a mixture of Gaussian (normal) distributions.

Details & Suboptions

- In both LearnDistribution and clustering functions, "GaussianMixture" models the probability density of a numeric space using a mixture of multivariate normal distributions. Each Gaussian is parametrized by its mean and covariance matrix, as in the "Multinormal" method.

- For clustering functions, each normal distribution represents a cluster component. Each data point is assigned to the component for which the probability density is the highest.

- When used in LearnDistribution, the following options can be given:

| Option | Default | Description |
| --- | --- | --- |
| "CovarianceType" | Automatic | type of constraint on the covariance matrices |
| "ComponentsNumber" | Automatic | number of Gaussians |
| MaxIterations | 100 | maximum number of expectation-maximization iterations |

- Possible settings for "CovarianceType" include:

| Setting | Description |
| --- | --- |
| "Diagonal" | only diagonal elements are learned (the others are set to 0) |
| "Full" | all elements are learned |
| "FullShared" | each Gaussian shares the same full covariance |
| "Spherical" | only diagonal elements are learned and are set to be equal |

- Except when "CovarianceType"->"FullShared", the covariance matrices can differ from each other.

- Information[LearnedDistribution[…],"MethodOption"] can be used to extract the values of options chosen by the automation system.

- LearnDistribution[…,FeatureExtractor->"Minimal"] can be used to remove most preprocessing and directly access the method.

- For clustering functions, when the number of clusters is not specified, a Bayesian inference method is used to decide the number of clusters automatically. The method places a DirichletDistribution prior over the mixing coefficients and a WishartDistribution prior over each component's mean and covariance. It works for arbitrary cluster sizes and densities. However, it is sensitive to initialization parameters and can fail when clusters are intertwined or anisotropic.

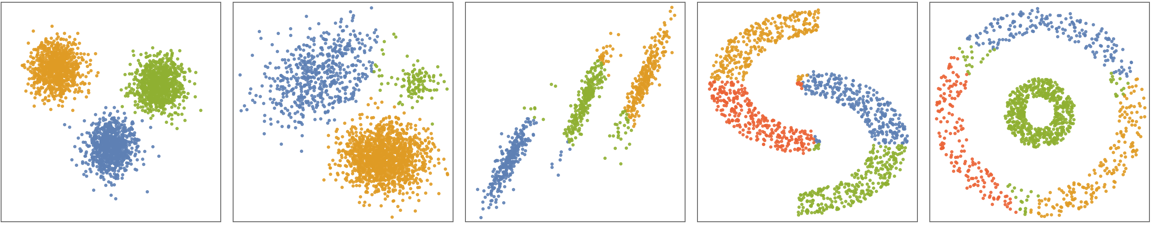

- The following plots show the results of the "GaussianMixture" clustering method applied to toy datasets:

-
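As a reading aid for the notes above, the model can be written out explicitly. The symbols are notation introduced here, not taken from this page: $K$ is the number of Gaussians, $\pi_k$ are the mixing coefficients, and $\mu_k$, $\Sigma_k$ are the mean and covariance matrix of component $k$ in dimension $d$. The assignment rule is a literal reading of "the component for which the probability density is the highest"; whether the implementation also weights each density by $\pi_k$ is not stated here.

```latex
\begin{align}
p(x) &= \sum_{k=1}^{K} \pi_k \, \mathcal{N}(x \mid \mu_k, \Sigma_k),
  \qquad \pi_k \ge 0, \quad \sum_{k=1}^{K} \pi_k = 1 \\
\hat{k}(x) &= \operatorname*{arg\,max}_{k \in \{1,\dots,K\}} \; \mathcal{N}(x \mid \mu_k, \Sigma_k)
\end{align}

% Constraint imposed on \Sigma_k by each "CovarianceType" setting
\begin{align}
\text{"Full":} \quad & \Sigma_k \ \text{any symmetric positive-definite matrix} \\
\text{"Diagonal":} \quad & \Sigma_k = \operatorname{diag}(\sigma_{k,1}^2, \dots, \sigma_{k,d}^2) \\
\text{"Spherical":} \quad & \Sigma_k = \sigma_k^2 \, I_d \\
\text{"FullShared":} \quad & \Sigma_1 = \dots = \Sigma_K = \Sigma
\end{align}
```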

Examples

Basic Examples (5)

Train a Gaussian mixture distribution on a numeric dataset:

Look at the distribution Information:

Obtain an option value directly:

Compute the probability density for a new example:

Plot the PDF along with the training data:

Generate and visualize new samples:
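The inputs for the steps captioned above are not reproduced on this page. The following is a minimal sketch of what they might look like, assuming a one-dimensional dataset invented for illustration; the names `data`, `ld` and `samples` are hypothetical, and the plotting choices are one option among many.

```wolfram
(* Illustrative data (an assumption): two groups of real values *)
data = Join[
   RandomVariate[NormalDistribution[1, 0.3], 50],
   RandomVariate[NormalDistribution[5, 0.5], 50]];

(* Train a Gaussian mixture distribution *)
ld = LearnDistribution[data, Method -> "GaussianMixture"];

(* Look at the distribution information *)
Information[ld]

(* Extract the option values chosen by the automation system *)
Information[ld, "MethodOption"]

(* Probability density for a new example *)
PDF[ld, 1.05]

(* Plot the PDF with the training data marked on the horizontal axis *)
Show[
 Plot[PDF[ld, x], {x, -1, 7}, PlotRange -> All],
 ListPlot[Transpose[{data, ConstantArray[0, Length[data]]}], PlotStyle -> Red]]

(* Generate and visualize new samples *)
samples = RandomVariate[ld, 200];
Histogram[samples, 30]
```

Because the data are random, the learned parameters and the density value differ from run to run.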

Find clusters of random 2D vectors as identified by the "GaussianMixture" method:

Find clusters of similar values using the "GaussianMixture" method:
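A sketch of the two clustering calls captioned above, assuming made-up inputs (the page's original data are not shown). The names `points` and `clusters2D` are hypothetical.

```wolfram
(* Random 2D vectors in the unit square *)
points = RandomReal[1, {200, 2}];
clusters2D = FindClusters[points, Method -> "GaussianMixture"];

(* Each cluster is drawn as its own point series *)
ListPlot[clusters2D]

(* Scalar values; this list is illustrative only *)
FindClusters[{1, 2, 10, 12, 3, 1, 13, 25}, Method -> "GaussianMixture"]
```

Since no cluster count is given, the number of clusters is left to the automatic selection described in the Details section.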

Train a Gaussian mixture distribution on a two-dimensional dataset:

Plot the PDF along with the training data:

Use SynthesizeMissingValues to impute missing values using the learned distribution:
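The two-dimensional workflow captioned above could be sketched as follows. The dataset is an assumption (correlated Gaussian samples generated here), and `data2D`, `ld2D` and `filled` are hypothetical names.

```wolfram
(* Illustrative 2D data (an assumption): two correlated features *)
data2D = RandomVariate[
   MultinormalDistribution[{0, 0}, {{1, 0.8}, {0.8, 1}}], 300];

ld2D = LearnDistribution[data2D, Method -> "GaussianMixture"];

(* PDF contours with the training points drawn on top *)
Show[
 ContourPlot[PDF[ld2D, {x, y}], {x, -4, 4}, {y, -4, 4}, PlotRange -> All],
 ListPlot[data2D, PlotStyle -> Black]]

(* Fill in the missing coordinate of each example using the learned distribution *)
filled = SynthesizeMissingValues[ld2D, {{Missing[], 1.5}, {-0.5, Missing[]}}]
```

With strongly correlated features, the imputed coordinate would be expected to follow the known one, although the exact values depend on the random training data.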

Train a Gaussian mixture distribution on a nominal dataset:

Because of the necessary preprocessing, the PDF computation is not exact:

Use ComputeUncertainty to obtain the uncertainty on the result:

Increase MaxIterations to improve the estimation precision:

Scope (1)

Find an array of cluster indices using ClusteringComponents:
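A minimal sketch of the call captioned above. It assumes each row of a random matrix is one data element (level 1) and asks for two clusters; both choices, and the names `vectors` and `indices`, are illustrative rather than taken from this page.

```wolfram
(* 20 random 2D vectors; each row is treated as one element *)
vectors = RandomReal[1, {20, 2}];

(* One cluster index per row *)
indices = ClusteringComponents[vectors, 2, 1, Method -> "GaussianMixture"]
```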

Options (3)

"ComponentsNumber" (1)

"CovarianceType" (1)

Train a "GaussianMixture" distribution with a "Full" covariance:

Evaluate the PDF of the distribution at a specific point:

Visualize the PDF obtained after training a mixture of two Gaussians with covariance types "Full", "Diagonal", "Spherical" and "FullShared":

Perform the same operation but with an automatic number of Gaussians for each covariance type:
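The "CovarianceType" steps captioned above might be written as in the sketch below. The training data are an assumption (two elongated Gaussian blobs generated here), and `dataG`, `ldFull`, `types`, `lds` and `ldsAuto` are hypothetical names.

```wolfram
(* Illustrative data (an assumption): two elongated blobs *)
dataG = Join[
   RandomVariate[MultinormalDistribution[{0, 0}, {{1, 0.7}, {0.7, 1}}], 200],
   RandomVariate[MultinormalDistribution[{4, 0}, {{1, -0.7}, {-0.7, 1}}], 200]];

(* Train with a "Full" covariance and evaluate the PDF at one point *)
ldFull = LearnDistribution[dataG,
   Method -> {"GaussianMixture", "CovarianceType" -> "Full"}];
PDF[ldFull, {0.5, 0.5}]

(* Mixture of two Gaussians for each covariance type *)
types = {"Full", "Diagonal", "Spherical", "FullShared"};
lds = Table[
   LearnDistribution[dataG,
    Method -> {"GaussianMixture", "CovarianceType" -> t, "ComponentsNumber" -> 2}],
   {t, types}];
Table[
 ContourPlot[PDF[lds[[i]], {x, y}], {x, -3, 7}, {y, -4, 4},
  PlotRange -> All, PlotLabel -> types[[i]]],
 {i, Length[types]}]

(* Same covariance types, with the number of Gaussians left to Automatic *)
ldsAuto = Table[
   LearnDistribution[dataG,
    Method -> {"GaussianMixture", "CovarianceType" -> t}],
   {t, types}];
```

Comparing the four contour plots side by side shows how each constraint restricts the shape and orientation of the fitted components.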

Possible Issues (1)

Create and visualize noisy 2D moon-shaped training and test datasets:

Train a ClassifierFunction using "GaussianMixture" and find cluster assignments in the test set:

Visualizing clusters indicates that "GaussianMixture" performs poorly on intertwined clusters:
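The moon-shaped experiment captioned above could be reproduced along these lines. The generator `moons` is a hypothetical helper written for this sketch, with the noise level and sample sizes chosen arbitrarily; only ClusterClassify and its "GaussianMixture" method come from this page.

```wolfram
(* Hypothetical helper: n noisy points on each of two interleaved half-circles *)
moons[n_] := Join[
   Table[{Cos[t], Sin[t]} + RandomVariate[NormalDistribution[0, 0.1], 2],
    {t, RandomReal[{0, Pi}, n]}],
   Table[{1 - Cos[t], 0.5 - Sin[t]} + RandomVariate[NormalDistribution[0, 0.1], 2],
    {t, RandomReal[{0, Pi}, n]}]];

train = moons[200];
test = moons[100];
ListPlot[{train, test}, PlotLegends -> {"training", "test"}]

(* ClusterClassify returns a ClassifierFunction that assigns cluster labels *)
c = ClusterClassify[train, 2, Method -> "GaussianMixture"];
assignments = c[test];

(* Plot the test points grouped by assigned cluster *)
ListPlot[Table[Pick[test, assignments, k], {k, Union[assignments]}]]
```

If the method behaves as described, the two plotted groups cut across both moons instead of following their curved shapes.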

See Also

LearnDistribution, LearnedDistribution, MultinormalDistribution, NormalDistribution, MixtureDistribution, AnomalyDetection, SynthesizeMissingValues, RandomVariate, PDF, RarerProbability, FindClusters, ClusterClassify, ClusteringComponents, ClusteringTree, Dendrogram, DimensionReduction

Methods: Multinormal, KernelDensityEstimation, ContingencyTable, Agglomerate, DBSCAN, JarvisPatrick, KMeans, KMedoids, MeanShift, NeighborhoodContraction, Spectral