SecondOrderConeOptimization[f,cons,vars]

finds values of variables vars that minimize the linear objective f subject to second-order cone and/or linear constraints cons.

SecondOrderConeOptimization[c,{{a1,b1,α1,β1},…,{ak,bk,αk,βk}}]

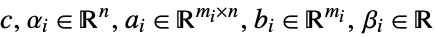

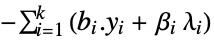

finds a vector ![]() that minimizes

that minimizes ![]() subject to the constraints

subject to the constraints ![]() .

.

SecondOrderConeOptimization[c,…,{dom1,dom2,…}]

takes ![]() to be in the domain domi, where domi is Integers or Reals.

to be in the domain domi, where domi is Integers or Reals.

SecondOrderConeOptimization[…,"prop"]

specifies what solution property "prop" should be returned.

SecondOrderConeOptimization

SecondOrderConeOptimization[f,cons,vars]

finds values of variables vars that minimize the linear objective f subject to second-order cone and/or linear constraints cons.

SecondOrderConeOptimization[c,{{a1,b1,α1,β1},…,{ak,bk,αk,βk}}]

finds a vector ![]() that minimizes

that minimizes ![]() subject to the constraints

subject to the constraints ![]() .

.

SecondOrderConeOptimization[c,…,{dom1,dom2,…}]

takes ![]() to be in the domain domi, where domi is Integers or Reals.

to be in the domain domi, where domi is Integers or Reals.

SecondOrderConeOptimization[…,"prop"]

specifies what solution property "prop" should be returned.

Details and Options

- Second-order cone optimization is also known as second-order cone programming (SOCP) and mixed-integer second-order cone programming (MISOCP).

- Second-order cone optimization is used in problems such as parameter fitting and geometric distance problems.

- Second-order cone optimization is a convex optimization problem that can be solved globally and efficiently with real, integer or complex variables.

- Second-order cone optimization finds

that solves the primal problem:

that solves the primal problem: -

minimize

subject to constraints ![TemplateBox[{{{{a, _, i}, ., x}, +, {b, _, i}}}, Norm]<=alpha_i.x+beta_i, i=1,..., k TemplateBox[{{{{a, _, i}, ., x}, +, {b, _, i}}}, Norm]<=alpha_i.x+beta_i, i=1,..., k](Files/SecondOrderConeOptimization.en/7.png)

where

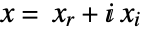

- Mixed-integer second-order cone optimization finds

and

and  that solve the problem:

that solve the problem: -

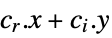

minimize

subject to constraints ![TemplateBox[{{{{a, _, {(, {j, _, r}, )}}, ., x}, +, {{a, _, {(, {j, _, r}, )}}, ., {yb, _, j}}}}, Norm]<=alpha_(j_r).x+alpha_(j_i).y+beta_j, j=1,..., k TemplateBox[{{{{a, _, {(, {j, _, r}, )}}, ., x}, +, {{a, _, {(, {j, _, r}, )}}, ., {yb, _, j}}}}, Norm]<=alpha_(j_r).x+alpha_(j_i).y+beta_j, j=1,..., k](Files/SecondOrderConeOptimization.en/12.png)

where

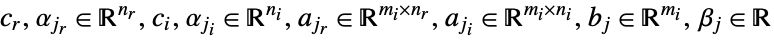

- When the objective function is real valued, SecondOrderConeOptimization solves problems with

![x in TemplateBox[{}, Complexes]^n x in TemplateBox[{}, Complexes]^n](Files/SecondOrderConeOptimization.en/14.png) by internally converting to real variables

by internally converting to real variables  , where

, where  and

and  .

. - The variable specification vars should be a list with elements giving variables in one of the following forms:

-

v variable with name  and dimensions inferred

and dimensions inferredv∈Reals real scalar variable v∈Integers integer scalar variable v∈Complexes complex scalar variable v∈ℛ vector variable restricted to the geometric region

v∈Vectors[n,dom] vector variable in  or

or

v∈Matrices[{m,n},dom] matrix variable in  or

or

- Note that linear constraints may be expressed using second-order constraints. For convenience, a single 0 is interpreted as needed as a matrix or vector with zero entries:

-

{ai,bi,0,0} linear equality constraints

{0,0,αi,βi} linear inequality constraint

- The constraints cons can be specified by:

-

LessEqual

scalar inequality GreaterEqual

scalar inequality VectorLessEqual

vector inequality VectorGreaterEqual

vector inequality Equal

scalar or vector equality Element

convex domain or region element - With SecondOrderConeOptimization[f,cons,vars], parameter equations of the form parval, where par is not in vars and val is numerical or an array with numerical values, may be included in the constraints to define parameters used in f or cons.

- The primal minimization problem has a related maximization problem that is the Lagrangian dual problem. The dual maximum value is always less than or equal to the primal minimum value, so it provides a lower bound. The dual maximizer provides information about the primal problem, including sensitivity of the minimum value to changes in the constraints.

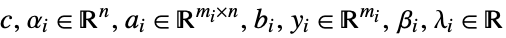

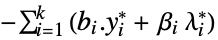

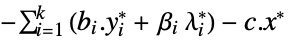

- The second-order cone optimization has a dual: » »

-

maximize

subject to constraints ![sum_(i=1)^k(a_i.y_i+alpha_ilambda_i)⩵c, ; TemplateBox[{{y, _, i}}, Norm]<=lambda_i, i=1,..., k sum_(i=1)^k(a_i.y_i+alpha_ilambda_i)⩵c, ; TemplateBox[{{y, _, i}}, Norm]<=lambda_i, i=1,..., k](Files/SecondOrderConeOptimization.en/34.png)

where

- For second-order cone optimization, strong duality always holds, meaning that if there is a solution to the primal minimization problem, then there is a solution to the dual maximization problem, and the dual maximum value is equal to the primal minimum value.

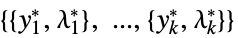

- The possible solution properties "prop" include:

-

"PrimalMinimizer"

a list of variable values that minimizes the objective function "PrimalMinimizerRules"

values for the variables vars={v1,…} that minimize

"PrimalMinimizerVector"

the vector that minimizes

"PrimalMinimumValue"

the minimum value

"DualMaximizer"

the maximizing dual vector "DualMaximumValue"

the dual maximizing value "DualityGap"

the difference between the dual and primal optimal values "Slack"

vector that converts inequality constraints to equality "ConstraintSensitivity"

sensitivity of  to constraint perturbations

to constraint perturbations"ObjectiveVector"

the linear objective vector "SecondOrderConeConstraints"

the list of coefficients for the constraints "LinearEqualityConstraints"

the linear equality constraint matrix and vector {"prop1","prop2",…} several solution properties - The following options may be given:

-

MaxIterations Automatic maximum number of iterations to use Method Automatic the method to use PerformanceGoal $PerformanceGoal aspects of performance to try to optimize Tolerance Automatic the tolerance to use for internal comparisons - The option Method->method may be used to specify the method to use. Available methods include:

-

Automatic choose the method automatically "SCS" SCS splitting conic solver "CSDP" CSDP semidefinite optimization solver "DSDP" DSDP semidefinite optimization solver "PolyhedralApproximation" approximate constraints using polyhedra "MOSEK" commercial MOSEK convex optimization solver "Gurobi" commercial Gurobi linear and quadratic optimization solver "Xpress" commercial Xpress linear and quadratic optimization solver - Computations are limited to MachinePrecision.

Examples

open all close allBasic Examples (4)

Minimize ![]() subject to the constraint

subject to the constraint ![]() :

:

The optimal point lies in a region defined by the constraints and where ![]() is smallest within the region:

is smallest within the region:

Minimize ![]() subject to the constraints

subject to the constraints ![]() :

:

The optimal point lies on the smallest value of ![]() in a region bounded by the intersection of two disks:

in a region bounded by the intersection of two disks:

Minimize ![]() subject to the constraints

subject to the constraints ![]() . Specify the inputs in matrix-vector form:

. Specify the inputs in matrix-vector form:

Solve the problem using matrix-vector form:

Scope (27)

Basic Uses (9)

Minimize ![]() subject to the constraints

subject to the constraints ![]() :

:

Get the minimizing value using solution property "PrimalMinimiumValue":

Minimize ![]() subject to the constraints

subject to the constraints ![]() :

:

Get the minimizing value and minimizing vector using solution properties:

Minimize ![]() subject to the constraints

subject to the constraints ![]() :

:

Define the objective as ![]() and the constraints as

and the constraints as ![]() :

:

Solve using matrix-vector inputs:

Minimize ![]() subject to the constraints

subject to the constraints ![]() :

:

Define the objective as ![]() and the constraints as

and the constraints as ![]() :

:

Specify the equality constraint as:

Solve using matrix-vector inputs:

Minimize ![]() subject to the constraint

subject to the constraint ![]() using constant parameter equations for a and b:

using constant parameter equations for a and b:

Minimize ![]() subject to the constraint

subject to the constraint ![]() using vector variable

using vector variable ![]() :

:

Minimize ![]() subject to the constraint

subject to the constraint ![]() :

:

Specify the constraints using VectorGreaterEqual () and VectorLessEqual ():

Minimize ![]() subject to

subject to ![]() . Use Inactive[Plus] to avoid unintentional threading:

. Use Inactive[Plus] to avoid unintentional threading:

Specify the matrix ![]() and vector

and vector ![]() as parametrized equations to avoid using Inactive:

as parametrized equations to avoid using Inactive:

Minimize ![]() subject to

subject to ![]() . Use NonNegativeReals to specify constraint

. Use NonNegativeReals to specify constraint ![]() :

:

Integer Variables (4)

Specify integer domain constraints using Integers:

Specify integer domain constraints on vector variables using Vectors[n,Integers]:

Specify non-negative integer domain constraints using NonNegativeIntegers (![]() ):

):

Specify non-positive integer domain constraints using NonPositiveIntegers (![]() ):

):

Complex Variables (4)

Primal Model Properties (3)

Minimize ![]() subject to the constraints

subject to the constraints ![]() :

:

Get the primal minimizer as a vector:

Obtain the primal minimum value using symbolic inputs:

Extract the matrix-vector inputs of the optimization problem:

Solve using matrix-vector form:

Recover the symbolic primal value by adding the objective constant:

Find the slack ![]() associated with a minimization problem:

associated with a minimization problem:

Dual Model Properties (3)

The dual problem is to maximize ![]() subject to

subject to ![]() and

and ![]() :

:

Solve the resulting maximization problem:

The primal minimum value and the dual maximum value coincide because of strong duality:

That is the same as having a duality gap of zero. In general ![]() :

:

Construct the dual problem using constraint matrices extracted from the primal problem:

The dual problem is to maximize ![]() subject to

subject to ![]() and

and ![]() :

:

Solve the resulting maximization problem:

Get the dual maximum value directly using solution properties:

Sensitivity Properties (4)

Use "ConstraintSensitivity" to find the change in optimal value due to constraint relaxations:

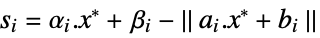

Each element of the sensitivity is ![]() , where

, where ![]() is a vector and

is a vector and ![]() is a scalar:

is a scalar:

Consider the new constraints ![]() , where

, where ![]() is the relaxation for constraint

is the relaxation for constraint ![]() :

:

The sensitivity element ![]() is associated with relaxation of

is associated with relaxation of ![]() . The expected new optimal value is:

. The expected new optimal value is:

Compare by directly solving the relaxed problem:

Consider the new constraints ![]() :

:

The sensitivity element ![]() is associated with relaxation of

is associated with relaxation of ![]() . The expected new optimal value is:

. The expected new optimal value is:

Compare by directly solving the relaxed problem:

Each sensitivity is associated with an inequality or equality constraint:

Extract the second-order cone constraints. The constraint ![]() is given as

is given as ![]() :

:

Linear constraints are given as ![]() . The associated sensitivity

. The associated sensitivity ![]() will have

will have ![]() :

:

The constraints and their associated sensitivities:

The change in optimal value due to constraint relaxation is proportional to the sensitivity value:

Compute the minimal value and constraint sensitivity:

A zero sensitivity will not change the optimal value if the constraint is relaxed:

A negative sensitivity will decrease the optimal value:

A positive sensitivity will increase the optimal value:

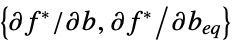

The "ConstraintSensitivity" is related to the dual maximizer of the problem:

The sensitivity ![]() satisfies

satisfies ![]() , where

, where ![]() is the dual inequality maximizer:

is the dual inequality maximizer:

Options (8)

Method (4)

The method "SCS" uses the splitting conic solver method:

"CSDP" and "DSDP" reduce to semidefinite optimization and use the interior point method:

"PolyhedralApproximation" approximates second-order constraints using polyhedra that have representations in terms of linear constraints:

The method "SCS" may give less accurate results than "CSDP" or "DSDP" due to relaxed tolerance:

Use a smaller tolerance to get better results for "SCS":

The method "PolyhedralApproximation" may have longer computation times than other methods:

Different methods may give different results for problems with more than one optimal solution:

PerformanceGoal (1)

Tolerance (3)

A smaller Tolerance setting gives a more precise result:

Find the error between the computed and exact minimum value using different Tolerance settings:

Visualize the change in minimum value error with respect to Tolerance:

A smaller Tolerance setting gives a more precise answer, but typically takes longer to compute:

A smaller tolerance takes longer:

The tighter tolerance gives a more precise answer:

Different methods have different tolerances, which affects the accuracy and precision:

Applications (35)

Basic Modeling Transformations (11)

Maximize ![]() subject to

subject to ![]() . Solve the maximization problem by negating the objective function:

. Solve the maximization problem by negating the objective function:

Negate the primal minimum value to get the corresponding maximum value:

Minimize ![]() subject to the constraints

subject to the constraints ![]() . Using the auxiliary variable

. Using the auxiliary variable ![]() , transform the problem to minimize

, transform the problem to minimize ![]() subject to

subject to ![]() :

:

Recover the original minimum value by performing direct substitution into ![]() :

:

Minimize ![]() subject to the constraints

subject to the constraints ![]() . Using the auxiliary variable

. Using the auxiliary variable ![]() , transform the problem to minimize

, transform the problem to minimize ![]() subject to

subject to ![]() :

:

Recover the original minimum value by performing direct substitution into ![]() :

:

Minimize ![]() subject to

subject to ![]() . Using two auxiliary variables

. Using two auxiliary variables ![]() , transform the problem to minimize

, transform the problem to minimize ![]() subject to

subject to ![]() :

:

Minimize ![]() subject to

subject to ![]() . Using the auxiliary variable

. Using the auxiliary variable ![]() , transform the problem to minimize

, transform the problem to minimize ![]() subject to

subject to ![]() :

:

Minimize ![]() subject to

subject to ![]() . For

. For ![]() ,

, ![]() is equivalent to

is equivalent to ![]() :

:

This is also equivalent to minimizing ![]() subject to

subject to ![]() :

:

SecondOrderConeOptimization directly does the transformation for Abs:

Minimize ![]() subject to the constraints

subject to the constraints ![]() . Using the auxiliary variable

. Using the auxiliary variable ![]() , transform the problem to minimize

, transform the problem to minimize ![]() subject to

subject to ![]() :

:

SecondOrderConeOptimization directly does the transformation for Abs:

Minimize ![]() . Using auxiliary variables

. Using auxiliary variables ![]() , convert the problem to minimize

, convert the problem to minimize ![]() subject to the constraints

subject to the constraints ![]() :

:

Directly using Abs in the constraints will be faster and more accurate:

Minimize ![]() . Using auxiliary variable

. Using auxiliary variable ![]() , convert the problem to minimize

, convert the problem to minimize ![]() subject to the constraints

subject to the constraints ![]() :

:

Minimize ![]() subject to

subject to ![]() , where

, where ![]() is a nondecreasing function, by instead minimizing

is a nondecreasing function, by instead minimizing ![]() . The primal minimizer

. The primal minimizer ![]() will remain the same for both problems. Consider minimizing

will remain the same for both problems. Consider minimizing ![]() subject to

subject to ![]() :

:

The true minimum value can be obtained by applying the function ![]() to the primal minimum value:

to the primal minimum value:

Since ![]() , an additional constraint of

, an additional constraint of ![]() must be applied:

must be applied:

The true minimum value can be obtained by applying the function ![]() to the primal minimum value:

to the primal minimum value:

Geometric Problems (6)

Find the minimum distance between two disks of radius 1 centered at (0,0) and (2,1). Using auxiliary variable ![]() , the transformed problem is to minimize

, the transformed problem is to minimize ![]() subject to

subject to ![]() :

:

Visualize the positions of the two points:

The auxiliary variable ![]() gives the distance between the points:

gives the distance between the points:

Find the minimum distance between two non-intersecting convex polygons:

Let ![]() be a point on

be a point on ![]() . Let

. Let ![]() be a point on

be a point on ![]() . The objective is to minimize

. The objective is to minimize ![]() . Using auxiliary variable

. Using auxiliary variable ![]() , the transformed objective is to minimize

, the transformed objective is to minimize ![]() subject to

subject to ![]() :

:

Visualize the position of the points ![]() and

and ![]() :

:

The auxiliary variable ![]() gives the distance between the points:

gives the distance between the points:

Find the plane that separates two non-intersecting convex polygons:

Let ![]() be a point on

be a point on ![]() . Let

. Let ![]() be a point on

be a point on ![]() . The objective is to minimize

. The objective is to minimize ![]() . Using auxiliary variable

. Using auxiliary variable ![]() , the transformed objective is to minimize

, the transformed objective is to minimize ![]() subject to

subject to ![]() :

:

According to the separating hyperplane theorem, the dual associated with the constraint ![]() will give the normal of the hyperplane:

will give the normal of the hyperplane:

The constraint ![]() internally has the form

internally has the form ![]() .

. ![]() is the only constraint containing a matrix

is the only constraint containing a matrix ![]() :

:

The hyperplane is constructed as:

Visualize the plane separating the two polygons:

Find the plane that separates two non-intersecting convex polygons:

Let ![]() be a point on

be a point on ![]() . Let

. Let ![]() be a point on

be a point on ![]() . The objective is to minimize

. The objective is to minimize ![]() . Using auxiliary variable

. Using auxiliary variable ![]() , the transformed objective is to minimize

, the transformed objective is to minimize ![]() subject to

subject to ![]() :

:

According to the separating hyperplane theorem, the dual associated with the constraint ![]() will give the normal of the hyperplane:

will give the normal of the hyperplane:

The constraint ![]() internally has the form

internally has the form ![]() .

. ![]() is the only constraint containing a matrix

is the only constraint containing a matrix ![]() :

:

The hyperplane is constructed as:

Visualize the plane separating the two polygons:

Find the center ![]() and radius

and radius ![]() of the smallest circle that encloses a set of points:

of the smallest circle that encloses a set of points:

The objective is to minimize the radius ![]() subject to the constraints

subject to the constraints ![]() :

:

The center and radius of the disk are:

The minimal enclosing disk can be found efficiently using BoundingRegion:

Find the radius ![]() and center

and center ![]() of a minimal enclosing ball that encompasses a given region:

of a minimal enclosing ball that encompasses a given region:

Minimize the radius ![]() subject to the constraints

subject to the constraints ![]() :

:

The minimal enclosing ball can be found efficiently using BoundingRegion:

Facility Location Problems (3)

A company wants to open a new factory. The factory needs raw materials from five warehouses. The transportation cost per unit distance between the new factory and warehouses is:

Let ![]() be the distance between factory and warehouses

be the distance between factory and warehouses ![]() The objective is to minimize

The objective is to minimize ![]() :

:

The five warehouses are located at:

The new factory must be located such that ![]() :

:

Find the optimal distances between the new factory and the warehouses:

The new factory location is closer to the warehouses where the transportation costs are higher:

A company wants to open a two new factories. The factories need raw materials from five warehouses. The transportation cost per unit distance between the new factories and warehouses is:

Let ![]() be the distance between factory

be the distance between factory ![]() and warehouse

and warehouse ![]() The objective is to minimize

The objective is to minimize ![]() :

:

The five warehouses are located at:

The new factories must be located such that ![]() :

:

Find the optimal distances between the new factory and the warehouses:

The new factory location is closer to the warehouses where the transportation costs are higher:

Find the minimum number and location of warehouses to construct that can supply the company's five retail stores. There are five potential sites. The cost of transporting one unit of product from a given warehouse to a given store is given by a table:

The positions of the retail stores ![]() are:

are:

The demand in the retail stores is:

For every warehouse, there is a $1000 overhead cost:

Each warehouse can hold a maximum of 500 units of product:

Let ![]() be the number of units that is transported from warehouse

be the number of units that is transported from warehouse ![]() to store

to store ![]() . The demand constraint is:

. The demand constraint is:

Let ![]() be a binary decision vector such that if

be a binary decision vector such that if ![]() , then warehouse

, then warehouse ![]() is constructed. The supply constraint is:

is constructed. The supply constraint is:

The new warehouses must be located such that ![]() :

:

The objective is to minimize the number of warehouses, the transportation cost of goods and the distance of the warehouse from the retail store:

Solve the minimum number and positions of the warehouses:

The number of units to be transported from warehouse ![]() to store

to store ![]() is:

is:

Data-Fitting Problems (6)

Find a linear fit to discrete data by minimizing ![]() subject to the constraints

subject to the constraints ![]() :

:

Using auxiliary variable ![]() , minimize

, minimize ![]() subject to

subject to ![]() :

:

Fit a fifth-order polynomial to discrete data by minimizing ![]() :

:

Construct the input data matrix using DesignMatrix:

Find the coefficients of the quadratic curve by minimizing ![]() subject to

subject to ![]() :

:

Find an approximating function to noisy data using bases ![]() such that the first and last points lie on the curve:

such that the first and last points lie on the curve:

The approximating function will be ![]() :

:

Find the coefficients of the approximating function by minimizing ![]() subject to

subject to ![]() :

:

Find a robust linear fit to discrete data by minimizing ![]() , where

, where ![]() is a known regularization parameter:

is a known regularization parameter:

Fit the line by minimizing ![]() subject to

subject to ![]() :

:

A plot of the line shows that it is robust to the outliers:

The robust ![]() fitting can also be obtained using the function Fit:

fitting can also be obtained using the function Fit:

Excess regularization will lead to the fit becoming increasingly insensitive to data:

Cardinality constrained least squares: minimize ![]() such that

such that ![]() has at most

has at most ![]() nonzero elements:

nonzero elements:

Let ![]() be a decision vector such that if

be a decision vector such that if ![]() , then

, then ![]() is nonzero. The decision constraints are:

is nonzero. The decision constraints are:

To model constraint ![]() when

when ![]() , chose a large constant

, chose a large constant ![]() such that

such that ![]() :

:

Using epigraph transformation, the objective is to minimize ![]() subject to

subject to ![]() :

:

Solve the cardinality constrained least-squares problem:

The subset selection can also be done more efficiently with Fit using ![]() regularization. First, find the range of regularization parameters that uses at most

regularization. First, find the range of regularization parameters that uses at most ![]() basis functions:

basis functions:

Find the nonzero terms in the ![]() regularized fit:

regularized fit:

Find the fit with just these basis terms:

Find the best subset of functions from a candidate set of functions to approximate given data:

The approximating function will be ![]() :

:

A maximum of 5 basis functions are to be used in the final approximation:

The coefficients associated with functions that are not chosen must be zero:

Using epigraph transformation, the objective is to minimize ![]() such that

such that ![]() :

:

Classification Problems (2)

Find a quartic polynomial ![]() that robustly separates two sets of points in the plane:

that robustly separates two sets of points in the plane:

The polynomial is defined as ![]() , with

, with ![]() :

:

For separation, set 1 must satisfy ![]() and set 2 must satisfy

and set 2 must satisfy ![]() :

:

The objective is to minimize ![]() , which gives twice the thickness between

, which gives twice the thickness between ![]() and

and ![]() . Using the auxiliary variable

. Using the auxiliary variable ![]() , the objective function is transformed to the constraint

, the objective function is transformed to the constraint ![]() :

:

Find the coefficients ![]() by minimizing

by minimizing ![]() :

:

Visualize the separation of the sets using the polynomial:

The value ![]() is the width in terms of level values of

is the width in terms of level values of ![]() , where there are no points:

, where there are no points:

Find a quartic polynomial ![]() that robustly separates two sets of points in 3D:

that robustly separates two sets of points in 3D:

The polynomial is defined as ![]() , with

, with ![]() :

:

For separation, set 1 must satisfy ![]() and set 2 must satisfy

and set 2 must satisfy ![]() :

:

The objective is to minimize ![]() . Using transformation

. Using transformation ![]() , the objective is now to minimize

, the objective is now to minimize ![]() :

:

Structural Optimization Problems (1)

Find the shape of a hanging chain formed by ![]() spring links under a vertical load at the end of each link. The objective is to find the link positions

spring links under a vertical load at the end of each link. The objective is to find the link positions ![]() :

:

The potential energy due to gravity is ![]() , where

, where ![]() is the vertical load at each end and

is the vertical load at each end and ![]() is gravity:

is gravity:

The potential energy due to the tension in the spring link due to stretching is ![]() , where

, where ![]() is the stretch in spring link

is the stretch in spring link ![]() and

and ![]() is the stiffness of the spring. Using

is the stiffness of the spring. Using ![]() , the energy is transformed to

, the energy is transformed to ![]() :

:

An additional constraint ![]() must be added due to the transformation:

must be added due to the transformation:

The ends of the linked chain are clamped at positions (0,0) and (2,-1):

Each link has to satisfy the condition ![]() , where

, where ![]() is the resting length of each spring:

is the resting length of each spring:

The final objective function is the sum of gravity and spring potential energy that must be minimized:

Find the end points of each of the spring links:

Visualize the shape of the resulting spring chain:

The stretch is greatest for the links near the ends of the link chain. Links 11 and 12 have the least elongation:

Portfolio Optimization (1)

Find the distribution of capital to invest in six stocks to maximize return while minimizing risk:

Let ![]() be the percentage of total investment made in stock

be the percentage of total investment made in stock ![]() . The return is given by

. The return is given by ![]() where

where ![]() is a vector of expected return value of each individual stock:

is a vector of expected return value of each individual stock:

The risk is given by ![]() ,

, ![]() is a risk-aversion parameter and

is a risk-aversion parameter and ![]() :

:

The objective is to maximize return while minimizing risk for a specified risk-aversion parameter:

The percentage of investment ![]() must be greater than 0 and must add to 1:

must be greater than 0 and must add to 1:

Compute the returns and corresponding risk for a range of risk-aversion parameters:

The optimal ![]() over a range of

over a range of ![]() gives an upper-bound envelope on the tradeoff between return and risk:

gives an upper-bound envelope on the tradeoff between return and risk:

Compute the percentage of investment ![]() for a specified number of risk-aversion parameters:

for a specified number of risk-aversion parameters:

Increasing the risk-aversion parameter ![]() causes the investment to be diversified:

causes the investment to be diversified:

Increasing the risk-aversion parameter ![]() causes the return on investment to decrease:

causes the return on investment to decrease:

Non-negative Matrix Factorization (1)

Investment Problems (3)

Find the number of stocks to buy from four stocks such that a minimum $1000 dividend is received and risk is minimized. The expected return value and the covariance matrix ![]() associated with the stocks are:

associated with the stocks are:

The unit price for the four stocks is $1. Each stock can be allocated a maximum of $2500:

The investment must yield a minimum of $1000:

A negative stock cannot be bought:

Using epigraph transformation, the risk, given by ![]() , is converted into a constraint:

, is converted into a constraint:

The total amount to spend on each stock is found by minimizing the risk given by:

The total investment to get a minimum of $1000 is:

Find the number of stocks to buy from four stocks with an option to short-sell such that a minimum dividend of $1000 is received and the overall risk is minimized:

The capital constraint, return on investment constraints and risk constraint are:

A short-sale option allows the stock to be sold. The optimal number of stocks to buy/short-sell is found by minimizing the risk given by the objective ![]() :

:

The second stock can be short-sold. The total investment to get a minimum of $1000 due to the short-selling is:

Without short-selling, the initial investment will be significantly more:

Find the best combination of six stocks to invest in out of a possible 20 candidate stocks, so as to maximize return while minimizing risk:

Let ![]() be the percentage of total investment made in stock

be the percentage of total investment made in stock ![]() . The return is given by

. The return is given by ![]() , where

, where ![]() is a vector of the expected return value of each individual stock:

is a vector of the expected return value of each individual stock:

The risk is given by ![]() ,

, ![]() is a risk-aversion parameter and

is a risk-aversion parameter and ![]() :

:

The objective is to maximize return while minimizing risk for a specified risk-aversion parameter:

Let ![]() be a decision vector such that if

be a decision vector such that if ![]() , then that stock is bought. Six stocks have to be chosen:

, then that stock is bought. Six stocks have to be chosen:

The percentage of investment ![]() must be greater than 0 and must add to 1:

must be greater than 0 and must add to 1:

Find the optimal combination of stocks that minimizes the risk while maximizing return:

The optimal combination of stocks is:

The percentages of investment to put into the respective stocks are:

Trajectory Optimization Problems (1)

Find the shortest path between two points while avoiding obstacles. Specify the obstacle:

Extract the half-spaces that form the convex obstacle:

Specify the start and end points of the path:

The path can be discretized using ![]() points. Let

points. Let ![]() represent the position vector:

represent the position vector:

The objective is to minimize ![]() . Using epigraph transformation, the objective can be transformed to

. Using epigraph transformation, the objective can be transformed to ![]() subject to the constraints

subject to the constraints ![]() :

:

Specify the end point constraints:

The distance between any two subsequent points should not be too large:

A point ![]() is outside the object if at least one element of

is outside the object if at least one element of ![]() is less than zero. To enforce this constraint, let

is less than zero. To enforce this constraint, let ![]() be a decision vector and

be a decision vector and ![]() be the

be the ![]()

![]() element of

element of ![]() such that

such that ![]() , then

, then ![]() and

and ![]() is large enough such that

is large enough such that ![]() :

:

Find the minimum distance path around the obstacle:

To avoid potential crossings at the edges, the region can be inflated and the problem solved again:

Properties & Relations (8)

SecondOrderConeOptimization gives the global minimum of the objective function:

Visualize the objective function:

Minimize gives global exact results for quadratic optimization problems:

NMinimize can be used to obtain approximate results using global methods:

FindMinimum can be used to obtain approximate results using local methods:

LinearOptimization is a special case of SecondOrderConeOptimization:

QuadraticOptimization is a special case of SecondOrderConeOptimization:

Use auxiliary variable ![]() and minimize

and minimize ![]() with the additional constraint

with the additional constraint ![]() :

:

SemidefiniteOptimization is a generalization of SecondOrderConeOptimization:

ConicOptimization is a generalization of SecondOrderConeOptimization:

Possible Issues (5)

Constraints specified using strict inequalities may not be satisfied for certain methods:

The reason is that SecondOrderConeOptimization solves ![]() :

:

The minimum value of an empty set or infeasible problem is defined to be ![]() :

:

The minimizer is Indeterminate:

The minimum value for an unbounded set or unbounded problem is ![]() :

:

The minimizer is Indeterminate:

Dual related solution properties for mixed-integer problems may not be available:

Constraints with complex values need to be specified using vector inequalities:

Just using Less will not work even when both sides are real in theory:

Tech Notes

Related Guides

Text

Wolfram Research (2019), SecondOrderConeOptimization, Wolfram Language function, https://reference.wolfram.com/language/ref/SecondOrderConeOptimization.html (updated 2020).

CMS

Wolfram Language. 2019. "SecondOrderConeOptimization." Wolfram Language & System Documentation Center. Wolfram Research. Last Modified 2020. https://reference.wolfram.com/language/ref/SecondOrderConeOptimization.html.

APA

Wolfram Language. (2019). SecondOrderConeOptimization. Wolfram Language & System Documentation Center. Retrieved from https://reference.wolfram.com/language/ref/SecondOrderConeOptimization.html

BibTeX

@misc{reference.wolfram_2025_secondorderconeoptimization, author="Wolfram Research", title="{SecondOrderConeOptimization}", year="2020", howpublished="\url{https://reference.wolfram.com/language/ref/SecondOrderConeOptimization.html}", note=[Accessed: 24-February-2026]}

BibLaTeX

@online{reference.wolfram_2025_secondorderconeoptimization, organization={Wolfram Research}, title={SecondOrderConeOptimization}, year={2020}, url={https://reference.wolfram.com/language/ref/SecondOrderConeOptimization.html}, note=[Accessed: 24-February-2026]}